Bonjour tout le monde. This year, the Marketing Automation team at Optimizely got serious about tracking our email A/B tests. In 2015, Optimizely will take experience optimization to the next level and continue to rigorously test campaigns in order to provide the best experience for our customers.

Looking back at some A/B tests we ran this year, here are some lessons learned, along with where I hope to take our email experiments in 2015.

Lesson 1: Creating a solid hypothesis is vital.

A successful email experiment not only allows you to make a decision at that point in time, but also gives you a new piece of knowledge that you can apply to future email sends. If the winning variation only applies to the test you just completed, you are missing an opportunity to get the most value out of your experiments.

I realized this while pulling the results of a particular test where the variation (FAQ: How long should my test run?) saw a 33.9% increase in clicks.

Subject line test

Control: Are you measuring your tests accurately? Measuring statistical significance of your test

Variation: FAQ: How long should my test run?

Stoked on this result, I went to post the findings in Optiverse, only to realize that I didn’t actually know what had made the variation successful. Did people click on this variation because they liked to read FAQs? Or because it was written in first person? Or something else entirely?

I looked to see what our hypothesis was upon creating the experiment, only to discover that we hadn’t written one.

:/

The first step to creating a solid hypothesis is to actually write it down before you run the test.

*If you are looking for a great resource on constructing a solid hypothesis, check out this blog post by Shana Rusonis. I’ve recommended this post probably 20 times already because it outlines what a foolproof hypothesis looks like, and provides recommendations on how to draw the most insights from your experiments.

So while this particular subject line test was inconclusive, we can always test again to generate clear results.

Let’s dig into this test a little deeper: our goal for this email was to anticipate questions we often hear from new customers, and provide them with accessible resources. A solid hypothesis for this test could have been: If we pose a question in our subject line that mirrors how our customers think about a testing challenge, then clicks to our resource page will increase.

When thinking about the challenge from an experience optimization perspective, some follow-up questions arise: how do our customers find answers to questions? Is email the right way to deliver answers to common questions? What kind of customers engage with our resources through email?

Some follow-up test ideas for 2015:

- Target the audience:

  - Hypothesis: The click through rate will be higher on FAQ emails for customers who have previously submitted support cases.

- Test resource placement:

  - Hypothesis: Adding a FAQ article list in our help center next to the support submission form will decrease support tickets that are related to the FAQ.

These tests will help us understand what is important to our customers, and how to best communicate with them.

Lesson 2: Statistically insignificant experiments can drive business decisions.

Working at a startup, we are constantly mindful of the balance between adopting new marketing trends and keeping our process lean and dynamic. We are often inspired to try out new products, integrations, and tools to deliver better experiences to our customers. However, every new integration requires training and technical setup, and often means more review time for each email sent. A/B tests are super helpful for gauging whether a new integration, product, or feature is worth the investment.

Back in March, we tested a dynamic email integration (Powerinbox) for our newsletter that would serve personalized content to our contacts. The integration had the potential to streamline our monthly newsletter by creating a template that would automatically send the most valuable content to each of our users. It would also mean another tool for the team to manage and an added line item in our budget.

We tested our newsletter with Powerinbox and without. The results: there was no statistically significant difference between click rates. By setting up this test, we were able to make an informed decision on whether to purchase this email integration, and we saved ourselves tons of time that would have gone into implementing a new product in our marketing stack.
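To make "no statistically significant difference between click rates" concrete, here is a minimal sketch of one common way to compare two click rates: a two-sided, two-proportion z-test. This is an assumption for illustration, not necessarily how the Powerinbox test was actually evaluated, and the counts below are hypothetical rather than the real newsletter data.

```python
import math


def two_proportion_z_test(clicks_a, sends_a, clicks_b, sends_b):
    """Two-sided z-test for a difference between two click rates.

    Returns (z, p_value). Assumes both groups are large enough for the
    normal approximation to be reasonable.
    """
    rate_a = clicks_a / sends_a
    rate_b = clicks_b / sends_b
    # Pooled click rate under the null hypothesis of "no difference".
    pooled = (clicks_a + clicks_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        raise ValueError("click rates of exactly 0% or 100% cannot be tested")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts for illustration only (not the actual newsletter data).
z, p = two_proportion_z_test(clicks_a=500, sends_a=10_000,
                             clicks_b=520, sends_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
if p >= 0.05:
    print("No statistically significant difference at the 5% level.")
```

With counts this close, the p-value lands well above the conventional 0.05 threshold, which is the kind of result that can justify skipping a new tool.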

Lesson 3: Run experiments broad enough to optimize future emails.

We got a few really interesting takeaways from our tests this year. Here are some of the results!

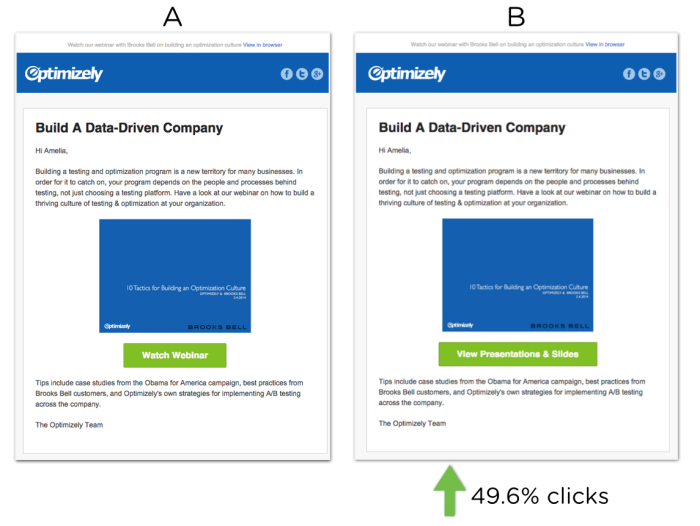

Test 1: Call to Action Button

A: Watch Video | B: View Presentation & Slides

- Audience: Customers

- Goal: Clicks on call to action button

- Result: B increased clicks on CTA by 49.6%.

- Takeaway: Our customers like checking out slides, not just video. We were also able to prove ROE for uploading our webinar slides to Slideshare!

Test 2: Subject Line

A: Why You’re Crazy to Spend on SEM But Not A/B Testing

B: [Ebook] Why You’re Crazy to Spend on SEM But Not A/B Testing

- Audience: Leads

- Goal: Clicks on call to action button

- Result: Subject line B led to a 30% increase in clicks.

- Takeaway: Our lead database likes to know what type of content they are receiving.

Test 3: Subject Line

A: Get Optimizely Certified for 50% Off | B: Get Optimizely Certified & Advance Your Career

- Audience: Customers

- Goal: Opens

- Result: Subject line B led to a 13.3% increase in email opens.

- Takeaway: Our customers love career growth opportunities.

Test 4: Call to Action Button

A: 1 CTA | B: Multiple CTAs

- Audience: Customers

- Goal: Clicks on call to action

- Result: A, 1 call to action, led to a 13.3% increase in clicks.

- Takeaway: One focused CTA is more effective than multiple options. It’s always a great exercise to challenge email best practices for your audience, as industry standards may not always apply.

Test 5: Send Time

A: 7am PT | B: 4pm PT

- Audience: Customers

- Goal: Clicks

- Result: B, a send time of 4pm PT, increased clicks by 8.5%.

- Takeaway: Our customers are more likely to click through our emails in the afternoon. We tested our Product Updates email campaign, sending one variation at 7am PT and the other at 4pm PT. While this increase was statistically significant, it will be useful to test this in different countries and time zones to create more optimized experiences for our customers worldwide.

- Next steps: Experiment with send times per time zone/country.
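The percentage increases reported for these tests read as relative lifts, meaning the variation's rate compared against the control's rate. That interpretation is an assumption, and the rates in this small sketch are hypothetical, chosen only to show the arithmetic.

```python
def relative_lift(control_rate, variation_rate):
    """Percentage change of the variation's rate relative to the control's."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variation_rate - control_rate) / control_rate * 100


# Hypothetical click rates: 4.00% for the control, 4.34% for the variation.
lift = relative_lift(control_rate=0.0400, variation_rate=0.0434)
print(f"Relative lift: {lift:.1f}%")
```

Note that a relative lift says nothing on its own about significance: the same lift can be noise on a small send and a solid result on a large one.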

Lesson 4: Create meaningful and fearless experiments.

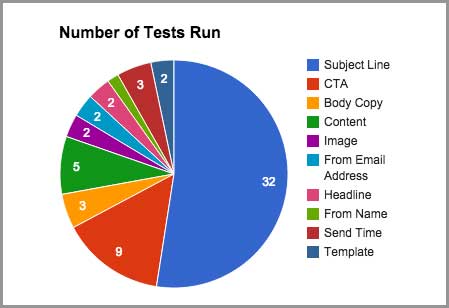

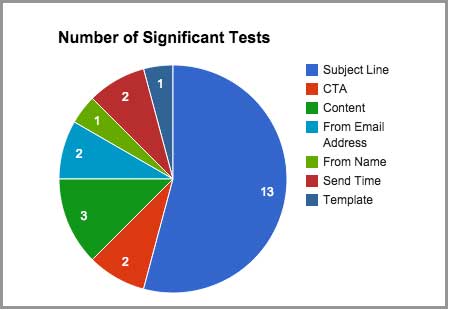

We’ve recorded a total of 82 email A/B tests to date. Of those 82, 27 have been statistically significant! That’s about 33%. While this is a healthy average, there is an easy way to get more statistically significant results from your tests: be fearless.

It’s easy to stick to testing subject lines and tweaking words within a CTA button. However, you will most likely find significant, valuable results if you test big changes. The Optimizely Academy has a great article on this.
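One way to see why big changes tend to reach significance more often is that larger effects need far fewer sends to detect. The sketch below uses a standard normal-approximation sample size formula for comparing two proportions. The baseline click rate, the lifts, and the 5% significance / 80% power settings are all assumptions for illustration, not figures from our tests.

```python
import math
from statistics import NormalDist


def sends_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate sends needed per variation to detect a given relative lift.

    Uses the normal approximation for a two-sided test of two proportions.
    relative_lift is a fraction, e.g. 0.5 for a 50% increase.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical 5% baseline click rate; compare a bold change with a small tweak.
bold = sends_per_variation(baseline_rate=0.05, relative_lift=0.50)
tweak = sends_per_variation(baseline_rate=0.05, relative_lift=0.05)
print(f"Sends per variation for a 50% lift: {bold}")
print(f"Sends per variation for a 5% lift:  {tweak}")
```

Under these assumptions the small tweak needs dramatically more sends than the bold change, so with a fixed list size, bolder variations are the ones most likely to produce a clear answer.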

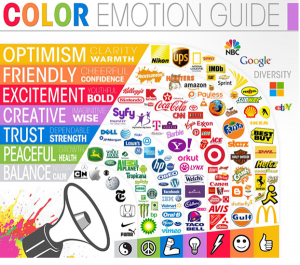

In 2015, we want to make our email experiments fearless, creative, and high impact. Some things we hope to learn this year: how humor affects open and click rates, the optimal medium for delivering different types of information, and how to optimize our email experience for customers worldwide in a way that fits their culture and way of communicating.
