Here’s what you need to know about AI’s role in visual content creation and the two types of genAI tools for your creative needs.

(This is the third article in a series about how generative AI is impacting marketing. The first part is The rise of generative AI and the second is Working with text generators.)

“A picture is worth a thousand words” is a saying first attributed to Arthur Brisbane, an editor and public relations professional, during a 1911 banquet discussing journalism and publicity. Here we are over 100 years later, and it’s just as meaningful today.

The digital era and the proliferation of visual content channels like websites, social media and even the metaverse have increased the need for marketers to become visual content publishers. A campaign lives and dies by the strength of the creative, and brands pay top dollar to brilliant artists and experienced designers in order to make an impact on their audiences and stand out from their competition.

With visual generative AI tools, anyone can create great works of art and stunning visual content — no graphic design or art skills required. We are once again seeing a democratization of digital content creation, similar to how websites evolved from requiring coding and design skills to the development of tools like WordPress and Wix that enabled anyone to create a website. Agencies are scrambling to redefine their value, and savvy marketers are leveraging these new tools to help them save time and money.

Two types of visual genAI tools

Broadly speaking, you can divide visual genAI tools into two categories:

- Artwork and image/video generators.

- Design and image/video editing tools.

As we speak, the builders of these tools are working to merge these two functions, and I have found the benefit to marketing teams comes from a combination of both capabilities. Let’s unpack each one, explaining the differences and the top tools in each category.

Text-to-image and text-to-video generators

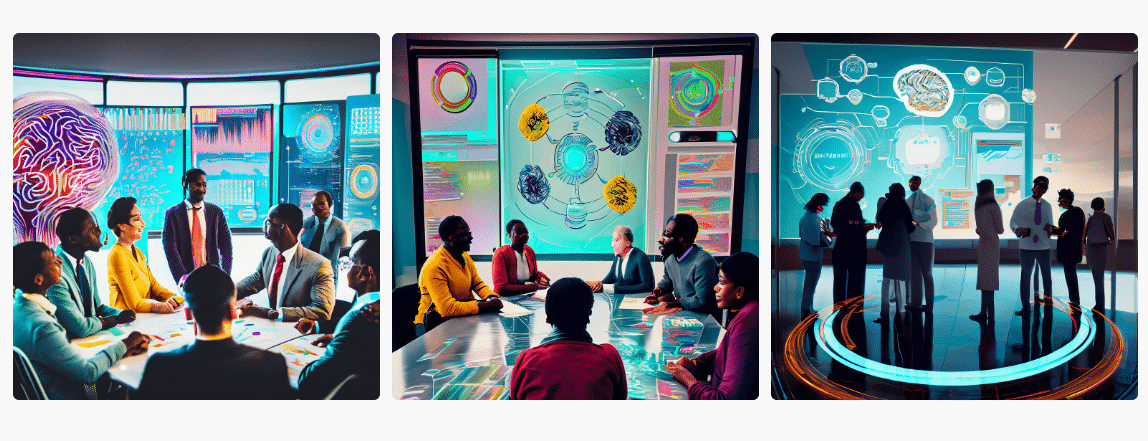

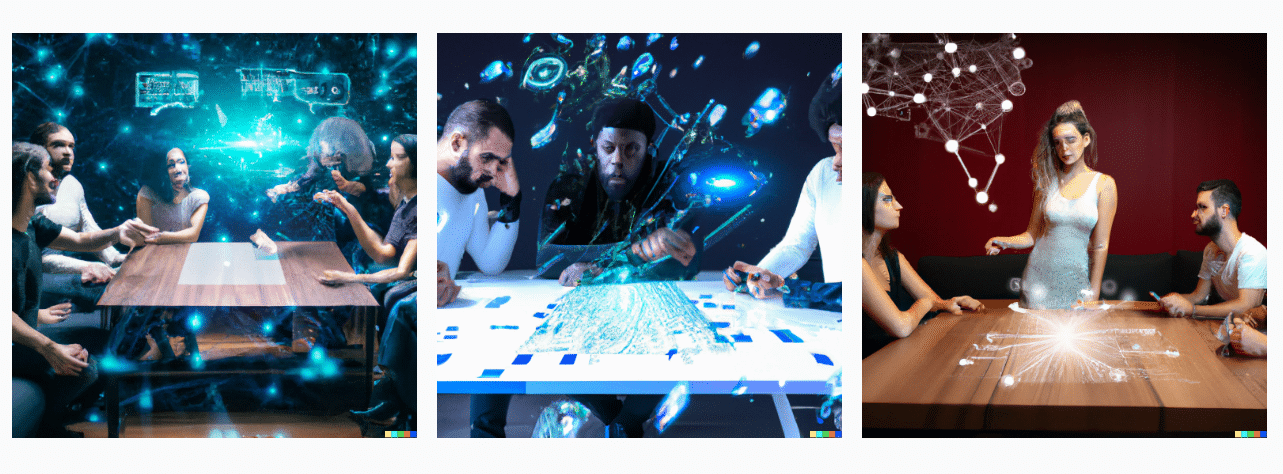

Simply put, these platforms allow users to enter a text prompt from which the underlying generative AI model produces an image or a video. The output depends on the data and materials the model was trained on, so each platform produces different results. The most popular image creation tools include Midjourney, OpenAI’s DALL-E and Adobe Firefly.

The same text prompt entered into these different tools will produce very different results. Each tool has different strengths and weaknesses, and together they support a wide range of marketing use cases. A few of the ways I have been using these tools include:

- Creating featured images for blog posts and social media posts.

- Crafting logos, mood boards, style guides and visuals to help communicate brand identity.

- Ideation and building visual assets to explain creative concepts.

- Developing quick comps for input and approval before developing the final output.

Historically, these artwork generators haven’t been great at adding specific text overlays, so another tool was needed to add text. However, as I’m writing this, DALL-E 3 is rolling out, and it is a game changer when it comes to text.

As much as I love Midjourney, I’m once again forced to predict that Microsoft and OpenAI will most likely rule visual content generation in the same way they currently rule text-based content generation with ChatGPT. For more information on text-based content and genAI, look at my previous article in this series, A marketer’s guide to AI: Working with text generators.

But what about video, you might be asking? So far, video generation tools haven’t blown me away. Tools like Runway.ml can produce short videos from a text prompt, and I’m certain these capabilities will improve over the coming months. Much more powerful and useful for marketers, in my opinion, are the AI video editing and design tools we’ll be discussing next.

Generative AI design and editing tools

The second broad category of genAI tools for visual content leverages AI to help design and edit outputs like comped images, animated images, presentations and videos.

Just to make my job of explaining this stuff even more difficult, several of these tools do include some sort of image or artwork generation feature. We can expect big players like Adobe, Microsoft and Google to continue to integrate these tools and capabilities. Recently, OpenAI announced that it had updated ChatGPT to include the use of voice prompts and the ability to “see” images. Microsoft has also started integrating DALL-E 3 and its AI design tool, Microsoft Designer, into the Bing search engine.

Without a doubt, the future will allow us to generate beautiful artwork and edit it as needed for our campaigns within one interface. However, today, my workflow has me jumping around to a handful of platforms to craft my final campaign content. I’m still saving hours and hours, along with loads of cash that would have been spent on paying designers and editors to do this work for me.

I generally start with an image generation tool like Midjourney or DALL-E and then upload the generated image to one of my preferred design and editing tools. The tools that I use to edit and design my visual content include platforms that excel at specific asset types like presentations, social media posts, animated GIFs and video shorts.

Understanding the final asset that you are looking to create can help you select the best tool for the job. I use Microsoft Designer for both static and animated images, along with GlossAI and PlayPlay for video output. There are also tools, like Canva, that offer many different types of output — from images and infographics to presentations.

As the tool landscape is changing dramatically day by day, I would recommend you start by searching for the top tools that use AI to generate the specific output you are looking for. As you experiment, you will find the set of tools that are most helpful for your specific task.

There are many marketing use cases for these types of design and editing tools, which can help marketers boost creativity and save time and money. Below are just a few of the obvious ones:

- Creating video shorts, chapters, summaries and snackables from long-form videos.

- Adding subtitles, intros, outros, text, graphic elements and audio to video content.

- Designing beautiful presentations instantly.

- Editing an existing image by adding or replacing elements, adding text overlay, stickers, etc.

- Crafting compelling video shorts using static images and existing video snippets.

A word about copyright

Let me preface this by saying I am not a lawyer, and this is by no means legal advice. However, it’s important that I share a bit about the brewing issues around copyright infringement. It’s a complicated topic, but simply put, companies that are using some of these generated artworks are at risk of being sued.

It will be years before the dust settles, and there are no clear-cut laws regulating this stuff, so in the meantime, exercising caution is recommended. Both Microsoft and Adobe have announced that they will cover customers’ costs resulting from legal action, so these are likely among the best options for cautious brands fearing lawsuits.

Also, it’s important to remember that in the U.S., work created entirely by AI, without human authorship, currently cannot be copyrighted. If you put it out there, anyone can use it.

Tutorials on using AI

The best way to become a genAI visual content master is by rolling up your sleeves and diving in. As part of this series of articles, I have crafted a series of hands-on video tutorials that will guide you through how to actually use this stuff. You can join the #AIMarketingRevolution Challenge on my YouTube channel, where I show you how to quickly compare and contrast visual output from top platforms like Midjourney, DALL-E and Adobe Firefly.

The post A marketer’s guide to AI: The new frontier of visual content appeared first on MarTech.
