Every industry is plagued by myths, misunderstandings and half-truths – and conversion optimization is no different.

This is especially true in marketing-related fields – partly because there are no universal practices and partly because content marketers are rewarded for producing highly shareable and linkable content (which isn’t always accurate content).

In any case, some myths are more poisonous than others. They can create misunderstanding among practitioners and confusion for beginners. With the help of some of the top experts in conversion optimization, here is a list of the 7 most poisonous conversion optimization myths:

1. “CRO is a List of Tactics and Best Practices”

This may be the most pervasive myth in conversion optimization, and it’s easy to see why: it’s simple (and effective) for a blogger to write a post of 101 conversion optimization tips or 150 A/B tests to run right now. Of course, these articles are bullshit. They make it seem like conversion optimization is a checklist you can run down, trying everything and getting insane uplifts. Totally wrong.

Let’s say you have a list of 100 ‘proven’ tactics. Let’s even say they’re ‘proven’ by science or psychology. Where would you start? Would you implement them all at once? Then your website would look like a Christmas tree.

Some changes will help and some will hurt. They’ll likely cancel each other out, or even leave you worse off overall. Without a rigorous and repeatable process, you’ll have no idea what had an impact – so you’ll miss out on the most important part of optimization: learning.

No Room For Spaghetti Testing

Even if you tested the 100 tactics one by one, you’d be wasting time. The average A/B test takes ~4 weeks to run, so running them all back to back would take 400 weeks, or roughly 7.7 years.
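The arithmetic is easy to check. Here is a minimal Python sketch; the helper name `serial_testing_years` is hypothetical, and it assumes every test takes the same number of weeks, tests run strictly one after another, and a year has 52 weeks.

```python
# Back-of-the-envelope check of the "one test at a time" arithmetic.
# Assumptions: every test runs for the same number of weeks,
# tests run strictly back to back, and a year has 52 weeks.
WEEKS_PER_YEAR = 52


def serial_testing_years(num_tests: int, weeks_per_test: float = 4.0) -> float:
    """Years needed to run num_tests A/B tests one after another."""
    total_weeks = num_tests * weeks_per_test
    return total_weeks / WEEKS_PER_YEAR


if __name__ == "__main__":
    years = serial_testing_years(100)
    # Prints: 100 tests x 4 weeks = 400 weeks, about 7.7 years
    print(f"100 tests x 4 weeks = {100 * 4} weeks, about {years:.1f} years")
```

Swap in your own average test length to see how quickly an unprioritized backlog outgrows the calendar.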

The key to optimization is having a good process to follow. With a good process, you can prioritize work and know which ‘tactics’ to try and which to ignore. You’ll have a good indication of where the problems are because you did the research, the heavy lifting.

Conversion optimization – when done right – is a systematic, repeatable, teachable process.
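One way to make “prioritize work” concrete is to score each idea in your backlog and test the highest scorers first. The sketch below is a hypothetical illustration, not a method prescribed by the experts quoted in this article: the `BacklogIdea` type, the three 1-10 criteria (impact, confidence from research, ease) and the equal weighting are all assumptions, and the example ideas are made up.

```python
from dataclasses import dataclass


@dataclass
class BacklogIdea:
    name: str
    impact: int      # 1-10: expected effect on conversions if it works
    confidence: int  # 1-10: how strongly your own research supports it
    ease: int        # 1-10: how cheap it is to build and run

    @property
    def score(self) -> float:
        # Equal-weight average of the three criteria (an assumption).
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas: list[BacklogIdea]) -> list[BacklogIdea]:
    """Return the ideas sorted from highest to lowest score."""
    return sorted(ideas, key=lambda idea: idea.score, reverse=True)


if __name__ == "__main__":
    backlog = [
        BacklogIdea("Tip from a '101 tactics' post", impact=5, confidence=2, ease=8),
        BacklogIdea("Fix checkout error seen in session replays", impact=8, confidence=9, ease=6),
        BacklogIdea("Rewrite pricing copy after customer interviews", impact=7, confidence=7, ease=5),
    ]
    for idea in prioritize(backlog):
        print(f"{idea.score:.1f}  {idea.name}")
```

The formula itself is yours to adapt. What matters is that the confidence score comes from your own research rather than from someone else’s list, which is what pushes the generic tip to the bottom here.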

What About Best Practices?

You’ll inevitably come across these tactics, tips and best practices blog posts, because they’re highly shareable and present idyllic, easy-to-digest opportunities for people to lift conversion rates (and make more money).

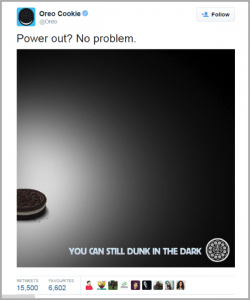

They’re based on “best practices,” which are where you should start – not where you should end up. And of course, best practices can fail, too.

The good news is that for every article you read on best practices, some are biting back, whether by debunking a few common ‘best practices’ or by questioning common tropes like social proof or the 3-click rule.

Just remember, results are always contextual. The goal is to figure out your specific customers and what will and won’t work on your specific website. Leave the 101 best practices articles to the amateurs.

2. “Split Testing = Conversion Rate Optimization”

Most people equate conversion rate optimization with A/B split testing: winners, losers, and a whole lot of case studies about button color tests.

Optimization is really about validated learning. You’re essentially balancing an exploration/exploitation problem as you seek the optimal path to profit growth. As Tim Ash puts it, split testing is just a tiny part of the optimization process. Here’s what Tim, CEO of SiteTuners, author of the bestselling book Landing Page Optimization, and chair of the international Conversion Conference event series, had to say on the topic:

Tim Ash:

“Testing is a common component of CRO. And I am glad that some online marketers have belatedly gotten the “testing religion,” as I call it. However, it is only a tiny part of optimization. Basically testing is there to validate the decisions that you have made, and to make sure that the business has not suffered as a result. But even if you are doing testing well (with proper processes, traffic sources, statistics, and methods for coming up with ideas), the focus should still not be exclusively on testing activities.

If you simply worry about testing velocity or other tactical outcomes you will miss the larger opportunity. Unless you see CRO as a strategic activity that has the potential to transform your whole business, you run the risk of it becoming simply a tactical activity that is a part of your online marketing mix. And that would be the biggest tragedy of all.”

Also, if you don’t have enough traffic for testing, you can still optimize. How? You can use things like:

- Heuristic analysis.

- User testing.

- Mouse tracking.

- User session replays.

- Conversations with customers or prospects.

- Site walkthroughs.

- Site speed optimization.

3. “If thine testing worketh not in the first months, thy site is not worthy to be optimized.”

Brian Massey, founder of Conversion Sciences and author of Your Customer Creation Equation: Unexpected Website Formulas of The Conversion Scientist, says that one of the biggest myths in optimization concerns expectations about results. Companies often throw in the towel if results don’t appear immediately. Here’s what Brian had to say:

Brian Massey:

“Optimization is not a program. It’s not a strategy. It’s not a get-rich scheme. It doesn’t work as quickly as PPC nor as slowly as SEO. Your mileage may vary.

Most nascent website optimization projects die a quick death in the first months when tests fail to deliver results. This is unfortunate for the companies that end up walking away, and very good for their competition.

The conclusion of nervous executives is, “Optimization doesn’t work for us,” or “Our site is already awesome.” My answers are, “It will and it’s not,” in that order.

The problem is not that they’re doing testing wrong, or that their audience is somehow immune to testing. The problem is more likely that they chose the wrong things to test.

We offer an aggressive 180-day program of testing. Even with our big brains and lab coats, it takes a lot of work to figure out what to test in those early months. We expect to have inconclusive tests, even with our deep evaluation.

I say to the optimization champions in companies all over, be patient, diligent, determined. Push through those early inconclusive tests before giving up the fight. If you want to hedge your bets, hire one of the consultants on this list.”

Another related myth is that conversion optimization is widely understood and appreciated by businesses. Even though the discipline has been around for years, that’s simply not the case, and this lack of understanding leads to many of the problems Brian mentioned above. Here’s how Paul Rouke from PRWD put it:

Paul Rouke:

“[Conversion optimization] should be widely understood and appreciated. It has been around long enough. Its importance and influence on business growth should mean that business leaders, decision makers and stakeholders all truly understand and value conversion optimisation.

Unfortunately we are still nowhere near this being the case. Why is this poisonous? Over the next 5 to 10 years, as the industry slowly matures, there is going to continue to be a huge amount of poorly planned and executed testing delivered either in-house or through agencies. All this is going to do is drive more misconceptions about the real art of conversion optimisation, such as “it’s just tweaking things” or “it’s just a tactic we need to use as part of our overall strategy”.

Someday we will get to the point where businesses truly understand the importance of optimisation to help evolve and grow their business. This day is still a long way away unfortunately. Education and enlightenment therefore still have a huge role to play in helping reach this day.”

4. “Test to validate opinions and hypotheses”

As humans, we’re all irrational, so a large part of optimization is mitigating natural cognitive biases in order to reach more objective business decisions. Andrew Anderson has written extensively about overcoming biases and has some great thoughts on this issue:

Andrew Anderson:

“By far the thing that I think is poisonous in the industry is the constant push for hypotheses and the tactics that lead to “better ones.” Fundamentally this leads people to think about and structure optimization in extremely inefficient ways and stops groups from focusing on the real core things that are the difference between mediocre results and great results.

The point of optimization is to maximize returns and to increase efficiency, and fundamentally focusing on validating an opinion of anyone, be it the optimizer or anyone else is going against that purpose. Even worse it stops people from really exploring or understanding optimization beyond that scope.

The only thing that is possible from overly focusing on opinion is to limit the scope of what you test and to allow the limitations of your imagination to control the maximum outcome of your testing program. It is scary and great how often things that people think should never win do and how rarely things that everyone loves are the best option, yet so many experts tell you to only focus on what they want you to test or what their proven program for creating hypotheses tells you to test.”

In other words, you don’t always have to be right. Sometimes – much of the time – what you think will work doesn’t, and vice versa. As Andrew explains, being wrong is where the learning occurs, so don’t be afraid of it:

Andrew Anderson:

“Being wrong is where all the money is, being right is boring and teaches us little. Explore, enjoy being wrong, and there is very little limit to what you can achieve.”

5. “When In Doubt, Copy The Competition”

The internet is brimming with conversion optimization case studies, so it’s tempting to fall into the trap of stealing others’ test ideas and creative efforts.

Don’t.

First off, be skeptical of case studies. Most don’t supply full numbers, so there’s no way of analyzing the statistical rigor of the test. My guess is these case studies are littered with low sample sizes and false positives. That’s just one of the reasons most CRO case studies are BS.
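Full numbers matter because the same headline lift can be convincing or meaningless depending on sample size. As a minimal sketch, here is a standard two-sided, two-proportion z-test in Python using only the standard library; the function name and the visitor and conversion counts are made up for illustration, and the normal approximation assumes reasonably large samples.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Returns (relative_lift_of_b_over_a, p_value). Uses the pooled
    standard error and the normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (p_b - p_a) / p_a, p_value


if __name__ == "__main__":
    # The same "+50% uplift" headline, backed by very different sample sizes.
    for label, counts in [("small test", (10, 200, 15, 200)),
                          ("large test", (1000, 20000, 1500, 20000))]:
        lift, p = two_proportion_z_test(*counts)
        print(f"{label}: lift {lift:+.0%}, p = {p:.4f}")
```

Both tests report a +50% lift, but only the larger one has a p-value small enough to take seriously (roughly 0.3 for the small test versus far below 0.001 for the large one). Without the raw counts, a case study gives you no way to tell which situation you are looking at.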

The other is that, even if the test was based on good statistics, you’re ignoring context. Your competition has different traffic sources, different branding, different customers. Something that works for them might tank your conversion rate. Here’s what Andrew Anderson wrote about the contextuality of A/B test results:

“In order to be the best alternative, you need context of the site, the resources, the upkeep and the measure of effectiveness against each other. Even if something is better, without insight into what other alternatives would do, it is simply replicating the worst biases that plague the human mind.”

Stephen Pavlovich from Conversion.com explains really well why you shouldn’t just copy another company’s creative:

Stephen Pavlovich:

“It’s tempting to copy what’s working for other people. Our nature as optimisers means we’re looking for ways to have impact with the minimum effort. But copying winning creative from case studies – or stealing design and copy from competitors – will often fail.

Even if the case studies are based on sound statistical analysis (unlikely), and even if your competitors have a well optimised design (also unlikely), you’d still be copying the end result and ignoring the process that created it.

We once created a new landing page for a SaaS company, which got a huge amount of press. It doubled their conversion rate from visit to paying customer. But then the copycats came – they copied the page structure and style, and sometimes even parts of the copy and HTML. Unsurprisingly, this didn’t work out for them. In fact, one even complained to me that the original result must have been flawed.

But this is the same behaviour as the cargo cults of Melanesia. After WWII, some South Pacific islanders copied the uniform and actions of the military personnel who had been based there, expecting it to result in planes appearing full of cargo.

At its core, conversion optimisation is a simple process: find out why people aren’t converting, then fix it. By copying others, you ignore the first part and are less likely to succeed at the second.”

Finally, if you’re spending your time copying competitors (and case studies from WhichTestWon), you’re not spending it on validated learning, exploration, or customer understanding.

On the same note, stop worrying about “what’s a good conversion rate?” What would you do with that information if you had it? Whether you’re under or over, you’d still (hopefully) be working on your specific site. Worrying about industry averages and other people’s results is therefore simply a waste of time.

Peep Laja summed it up well in a recent post:

Peep Laja:

“Using other people’s A/B test results has been popularized by sites like WhichTestWon, and blog posts boasting about ridiculous uplifts (most of those tests actually NOT run correctly, stopped too soon – be skeptical of any case study without absolute numbers published). It’s an attractive dream, I get it – I can copy other people’s wins without doing the hard work. But.

The thing is – other people’s A/B tests results are useless. They’re as useful as other people’s hot dog eating competition results. Perhaps interesting to know, but offer no value.

Why? Because websites are highly contextual.”

6. “Understand statistics? Nah, I’ll just wait until my tool says the test is done.”

While tools are getting more robust and making tests more intuitive to run, it’s still crucial to learn some basic statistics.

As for why you need to know statistics to run A/B tests, Matt Gershoff put it best when he wrote, “how can you make cheese when you don’t know where the milk comes from?!”

Knowing some basic statistics allows you to avoid type I and type II errors (false positives and false negatives), and it allows you to avoid imaginary lifts. It would take a few articles to write everything you need to know about stats, but here are some heuristics to follow while running tests:

- Test for full weeks.

- Test for two business cycles.

- Make sure your sample size is large enough (use a calculator before you start the test).

- Keep in mind confounding variables and external factors (holidays, etc.).

- Set a fixed horizon and sample size for your test before you run it.

- You can’t ‘spot a trend’; regression to the mean will occur. Wait until the test ends to call it.
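The sample-size, full-week and fixed-horizon heuristics above can be turned into a quick pre-test estimate. Below is a minimal Python sketch using the common normal-approximation formula for comparing two conversion rates. The function names, the defaults (5% significance, 80% power, and a two-week minimum that assumes a one-week business cycle) and the example traffic figures are illustrative assumptions; use it as a sanity check alongside a proper calculator, not as a replacement for one.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion test.

    baseline:     current conversion rate, e.g. 0.03 for 3%
    relative_mde: smallest relative lift worth detecting, e.g. 0.10 for +10%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def weeks_to_run(n_per_variant: int, variants: int,
                 daily_visitors: int, min_weeks: int = 2) -> int:
    """Whole weeks needed to reach the sample size, never below min_weeks.

    Rounding up to full weeks covers every weekday equally; min_weeks=2
    assumes a one-week business cycle, so adjust it to yours.
    """
    days = math.ceil(n_per_variant * variants / daily_visitors)
    return max(min_weeks, math.ceil(days / 7))


if __name__ == "__main__":
    # Fix the horizon BEFORE the test starts, then don't peek to call it early.
    n = sample_size_per_variant(baseline=0.03, relative_mde=0.10)
    weeks = weeks_to_run(n, variants=2, daily_visitors=5000)
    print(f"{n} visitors per variant, run for {weeks} full weeks")
```

With a 3% baseline, a +10% minimum detectable lift and 5,000 visitors a day split across two variants, this works out to a little over 53,000 visitors per variant, or 4 full weeks of testing. Smaller detectable lifts or less traffic push those numbers up quickly.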

7. “CRO is the same as SEO, social media, email, etc. If you have budget you can buy some; if you have less budget, you just cut down a little.”

It may seem easier to cut conversion rate optimization costs because it looks like you’re merely freezing your ROI rather than cutting traffic, acquisition or revenue. However, optimization is about more than a 10% lift somewhere in the funnel. It’s about decision optimization: learning where and how to change or remove elements to improve your UX and boost RPV (revenue per visitor). Here’s what Ton Wesseling from Testing.Agency has to say on the topic:

Ton Wesseling:

“In fact it’s even worse. It’s always hard to cut down on buying traffic. It has immediate impact on your business. Cutting down on CRO looks like freezing your conversion rate, not raising it for some time. That’s less painful than immediate lower traffic.

This is so wrong. CRO is a way of living, a way of working – continuously experimenting is what you need to stand out and grow. Cutting down on CRO is like digging out the poles under your home (yes, we have those in the Netherlands). Don’t do that. Become independent of traffic buying for survival ASAP, and never stop experimenting.”

Conclusion

For the integrity of an industry, it’s important to call out destructive myths and mistruths. That way, those beginning to learn will have a clearer (and less confusing) path, and businesses new to optimization won’t get discouraged by disappointing (or nonexistent) results.

Here’s the thing, though: there are many more myths that we probably missed. What are some destructive ones that you have come across in your work?

Digital & Social Articles on Business 2 Community(85)