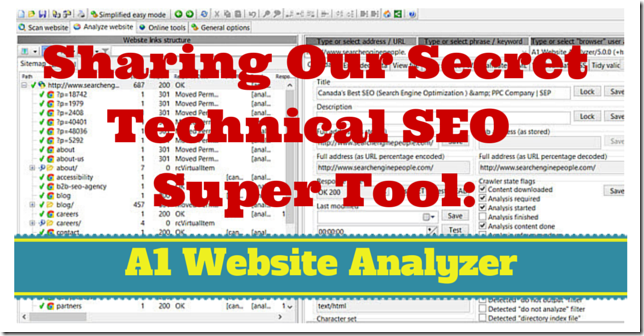

A1 Website Analyzer is a powerful SEO audit website crawler that installs on your computer like regular software. It can extract all technical and on-page information you need to perform a complete audit. It doesn’t require any running subscription to keep functioning. At SEP it’s our go-to tool when the going gets tough.

SEO Spider Crawlers

One of the most daunting but also most important tasks when you do an SEO audit is to gather information from as many pages as you can. The whole web site, if possible. Are there links that are 404 Not Found? Where redirects are used, are they of the type 301 Permanent Redirect? Are there redirect chains? Is Google Analytics installed on every page?
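To make those questions concrete, here is a minimal Python sketch of the redirect checks. It assumes the crawl results are already available as a plain dictionary; the `RESPONSES` data, the `redirect_path` and `audit` helpers, and the example.com URLs are all made up for illustration and have nothing to do with how any particular crawler stores its data.

```python
# Hypothetical crawl results: URL -> (status code, redirect target or None).
RESPONSES = {
    "http://example.com/": (301, "https://example.com/"),
    "https://example.com/": (302, "https://example.com/home"),
    "https://example.com/home": (200, None),
    "https://example.com/old": (301, "https://example.com/gone"),
    "https://example.com/gone": (404, None),
}

REDIRECT_CODES = {301, 302, 303, 307, 308}


def redirect_path(url, responses, max_hops=10):
    """Follow redirects from url and return the list of (url, status) hops."""
    path = []
    seen = set()
    while url is not None and url not in seen and len(path) < max_hops:
        seen.add(url)
        status, target = responses.get(url, (None, None))
        path.append((url, status))
        # Only keep walking when the response really was a redirect.
        url = target if status in REDIRECT_CODES else None
    return path


def audit(url, responses):
    """Answer the audit questions for one starting URL."""
    path = redirect_path(url, responses)
    hops = [status for _, status in path if status in REDIRECT_CODES]
    return {
        "final_status": path[-1][1],
        "is_chain": len(hops) > 1,  # more than one redirect in a row
        "all_permanent": all(code in (301, 308) for code in hops),
    }
```

Calling `audit("http://example.com/", RESPONSES)` on this sample data flags a two-hop chain that includes a temporary 302, while `audit("https://example.com/old", RESPONSES)` shows a single permanent redirect that ends in a 404.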

The larger a site is, the less realistic it becomes to do this by hand, going page to page. Most SEOs use a software crawler: a bot, or spider, that will start at a page, extract all its links, visit each of them, and repeat the process until the whole web site has been crawled or the process is manually stopped.
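The crawl loop described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not how any of the tools mentioned here are implemented. The `crawl` function, the `LinkExtractor` class, and the in-memory `SITE` dictionary (which stands in for real HTTP requests) are assumptions made for the example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=1000):
    """Breadth-first crawl of one host; returns {url: clicks from start_url}."""
    host = urlparse(start_url).netloc
    depth = {start_url: 0}
    queue = deque([start_url])
    visited = 0
    while queue and visited < max_pages:
        url = queue.popleft()
        visited += 1
        html = fetch(url)
        if html is None:  # nothing to parse, e.g. a 404
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href).split("#")[0]  # resolve and drop fragments
            if urlparse(link).netloc == host and link not in depth:
                depth[link] = depth[url] + 1
                queue.append(link)
    return depth


# Hypothetical four-page site used instead of real network requests.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a> <a href="https://other.example/">Ext</a>',
    "https://example.com/blog/post-1": '<a href="/missing">Broken</a>',
}

depths = crawl("https://example.com/", SITE.get)
```

Because the queue is processed breadth-first, the number stored for each URL is also its click distance from the start page. External links are skipped, and the `max_pages` cap plays the role of stopping the crawl manually.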

There are a slew of these bots out there ranging from university projects to large projects, some with commercial support. The vast majority of these bots index web pages — after which you can do something with them. You can build your own search engine or you can become a spammer spinning other people’s content.

For an SEO audit you’re looking for a tool that gives you as much technical information as you need, including some information on on-page elements. What’s the <title>? Are there <h>eading tags being used? Is there duplicate content?

There are only a few serious, dedicated SEO crawlers that make the mark: Screaming Frog SEO Spider Tool ($150/year), A1 Website Analyzer ($69 one-time purchase), and Win Web Crawler ($99 one-time purchase). Crawlers like Xenu Link Sleuth or LinkCrawler don’t come into play; these are dedicated (broken) link checkers.

Our pick of the litter has been A1 Website Analyzer. It was first to market with the functionality we needed at the time we needed it. Screaming Frog came on the scene later while Win Web Crawler, the oldest of the 3, was still more geared towards sucking up web pages and their content vs extracting SEO data.

A1 Website Analyzer

I always feel like I’ve just scratched the surface of the program; whenever I ask the developer for help with a crawling problem or suggest a feature it turns out what I need has already been added to the program.

That said, these are some of the things A1 Website Analyzer can do:

- Precisely control which parts of a website should be analyzed with “limit to” and “exclude” options you can set using URL patterns or using the + and – buttons

- Set user agent string

- Set type of request: GET for the whole page or just a HEAD request to only get the page info (much faster; great for recrawls and verification)

- Control the number of simultaneous connections, computer threads, time between requests, and much more, so even troublesome websites can be fully crawled

- Search every page for custom text and code. Regular expressions are supported. (Usage: is Google Analytics installed on all pages? Which pages miss Google Tag Manager? Which pages use that outdated piece of code the client looks to remove?)

- See length in pixels and characters for both page titles and descriptions

- Visually identify similar content

- Get a rough word count of the on-page text: easily identify thin content

- See redirects, type of redirect, the redirect target, and the redirect path to that target

- List pages marked canonical

- Find images without alt attribute text

- Collect the anchor texts used for pages

- See which pages link to this page — or which pages use this page

- Performs internal PageRank calculations: which are your most important pages according to your site’s link structure? (Takes canonical, nofollow, etc. into account). Columns include link-in count, link-out count, etc.

- How many clicks away from home is this page

- Can identify HTML and CSS errors as well as do spell checking

- Is this page indexed

- Keyword density analysis: what is this page optimized for

- Can run on a schedule using Windows’ built-in scheduler

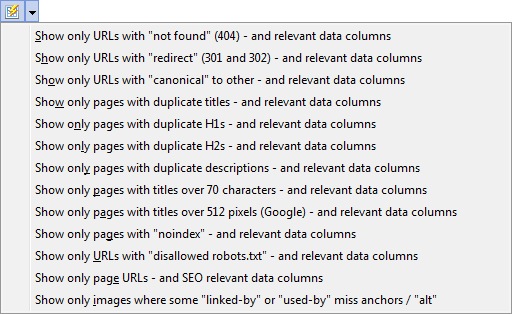

- Select any or all data columns, with or without filters, and export to CSV and HTML. This is my preferred way of looking at the data but the built-in filtering is great to quickly do things like only show broken URLs that respond with 404 “not found” and from where they are linked.

- Verify external links (find those broken outbounds!)

- Include alternate domain names for indexing (e.g. http + https variations) as well as alternative scanning paths

- etc. etc. etc.
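For readers curious what an internal PageRank calculation looks like, here is a rough Python sketch of the textbook power-iteration version. It is not the program's actual algorithm (which, per the list above, also takes canonical and nofollow into account). The `internal_pagerank` function, the damping value, and the `LINKS` graph are assumptions for illustration only.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of internal pages it links to."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small base score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            # A page without outgoing links spreads its score over all pages.
            targets = links.get(page) or pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical internal link structure of a tiny site.
LINKS = {
    "/": ["/products", "/about"],
    "/products": ["/", "/products/widget"],
    "/products/widget": ["/products", "/"],
    "/about": ["/"],
}

ranks = internal_pagerank(LINKS)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{score:.3f}  {page}")
```

On this sample graph the home page comes out on top, since every other page links to it. The scores always add up to 1, so they are best read as relative importance within the site.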

Obviously, once exported to CSV and opened in Excel with its filters, VLOOKUPs, and sorting, the information can be sliced, diced, and combined in any which way.
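If you would rather script that slicing than do it in Excel, a CSV export can be filtered with a few lines of Python. The sketch below is only an illustration: the column names (`URL`, `Response code`, `Linked from`) and the sample rows are assumptions, so adjust them to whatever headers your own export contains.

```python
import csv
import io

# Stand-in for an exported file. These column names are assumptions and
# not necessarily the headers the program writes.
EXPORT = """URL,Response code,Linked from
https://example.com/,200,
https://example.com/old-page,404,https://example.com/blog/
https://example.com/about,200,https://example.com/
https://example.com/promo,404,https://example.com/
"""


def broken_urls(csv_file, status_column="Response code", wanted="404"):
    """Return the rows whose status column equals the wanted code."""
    return [row for row in csv.DictReader(csv_file) if row[status_column] == wanted]


rows = broken_urls(io.StringIO(EXPORT))
for row in rows:
    print(row["URL"], "<-", row["Linked from"])
```

For a real export, pass an opened file instead of the `io.StringIO` object, for example `open("export.csv", newline="", encoding="utf-8")`, where the file name is again just a placeholder.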

How To Use: Quick Start

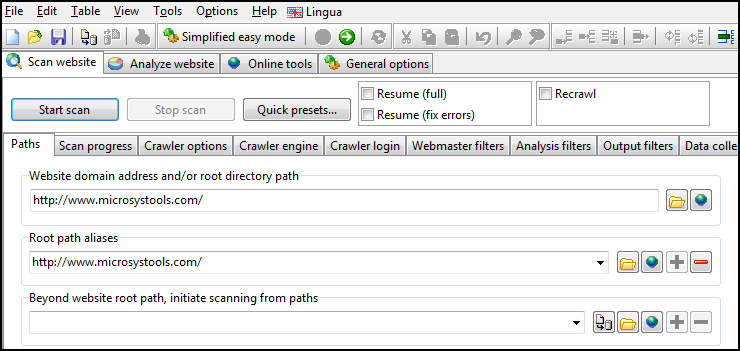

When you first start A1 Website Analyzer, it is already configured to collect the most interesting data – and also in a way that works with most websites. For instance, the amount of simultaneous connections is set to a low number to minimize the chance of any problem – e.g. the webserver throttling traffic.

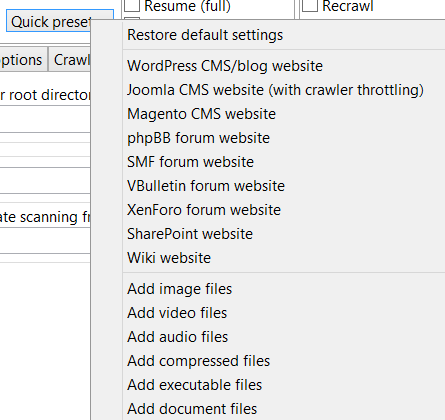

You can also use the “Quick presets” button to quickly adjust the underlying settings – e.g. if you are particularly interested in specific kinds of files.

To get started using the tool, you will need to crawl a website. To do so, simply enter the address of the domain and press the “Start scan” button.

When done, you are taken to the “Analyze website” tab where you can view all the data.

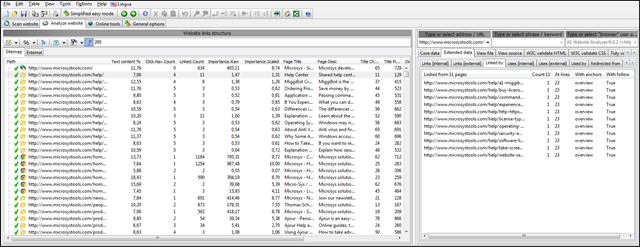

To the left we can see some SEO related columns for all pages that respond with “200 – found”. To the right, we can dive into various details for the selected URL.

The data you see to the left is determined by which data columns you have chosen should be visible and what “quick filters” you are using. You can also choose between viewing the website URLs as a “tree” or “list” and with/without URL percentage encoding.

There is also a “reports” button with instant report configurations. I prefer to simply show everything and export to Excel, where I use a combination of filters.

Using these, you can quickly generate different report views which can be exported to CSV (Excel) and HTML.

My Preferred Settings

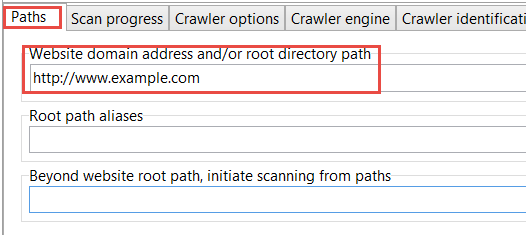

I copy & paste the domain name in the path field. It’s rare that a site is set up funky and needs additional paths here — but it happens.

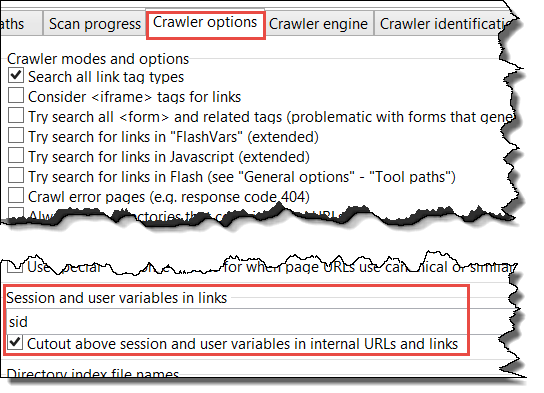

Crawler Options are good to go by default. Less and less often I have to work with a session ID or other query parameters that I need ignored.

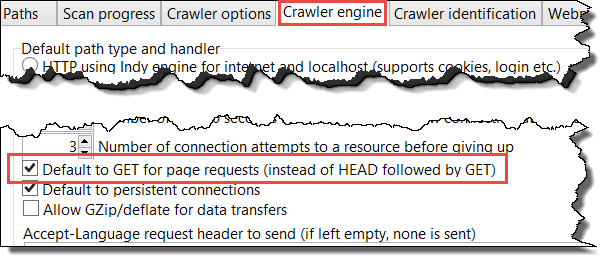

In Crawler Engine I likewise leave everything as is, unless I’m checking a list of URLs, in which case the GET default should be unchecked.
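The difference between the two request types is easy to see outside the tool as well. The Python sketch below uses only the standard library; the `build_request` and `check_url` helpers and the `example-audit-bot/0.1` user agent string are made up for this example and are not part of A1 Website Analyzer.

```python
import urllib.error
import urllib.request


def build_request(url, head_only=True, user_agent="example-audit-bot/0.1"):
    """Prepare a HEAD request (headers only) or a GET request (whole page)."""
    method = "HEAD" if head_only else "GET"
    return urllib.request.Request(
        url, method=method, headers={"User-Agent": user_agent}
    )


def check_url(url, head_only=True, timeout=10):
    """Return the HTTP status for url; urlopen follows redirects by default."""
    request = build_request(url, head_only)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # error responses such as 404 or 500


# Usage (performs a real network request, so it is left commented out):
# print(check_url("https://example.com/"))
```

A HEAD request asks the server for the status line and headers only, which is why it suits quick verification of a known list of URLs. Keep in mind that `urlopen` follows redirects, so the status returned here is that of the final destination.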

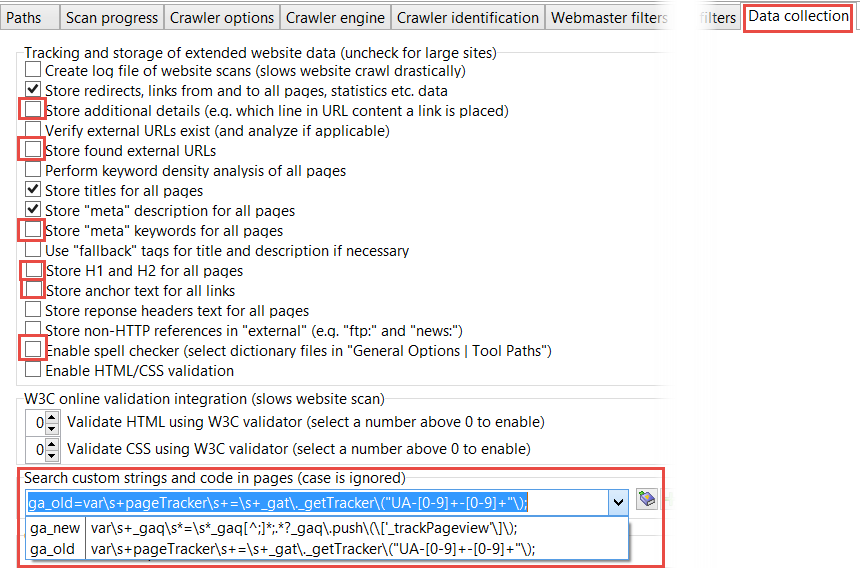

Data Collection is my most important tab. I uncheck a number of options. Anchor text I only leave checked when I’m doing something very specific.

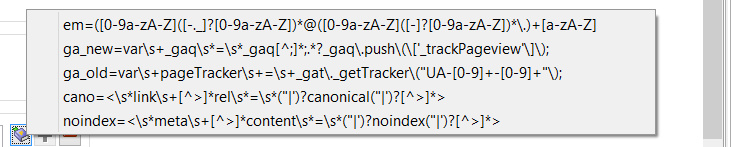

The search for custom strings is priceless. Click on the help icon next to the field to pick from a number of pre-built regular expressions.

You can add your own or search the regular way. You can add as many searches as you like.
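To give an idea of what such a search does, here is a small Python sketch that flags pages missing a tracking snippet. The patterns are my own illustrative guesses based on the script URLs commonly found in Google Analytics and Google Tag Manager snippets; the program's pre-built expressions may well differ. The `PATTERNS` and `PAGES` dictionaries and the `pages_missing` helper are hypothetical.

```python
import re

# Assumed patterns for illustration; the program's pre-built expressions may differ.
PATTERNS = {
    "google_analytics": re.compile(
        r"googletagmanager\.com/gtag/js|google-analytics\.com/(?:analytics|ga)\.js"
    ),
    "google_tag_manager": re.compile(r"googletagmanager\.com/gtm\.js"),
}


def pages_missing(pattern_name, pages):
    """Return the URLs whose HTML does not match the named pattern."""
    pattern = PATTERNS[pattern_name]
    return sorted(url for url, html in pages.items() if not pattern.search(html))


# Hypothetical page sources keyed by URL path.
PAGES = {
    "/": '<script async src="https://www.googletagmanager.com/gtag/js?id=G-XXXX"></script>',
    "/about": '<script src="https://www.google-analytics.com/analytics.js"></script>',
    "/landing": "<p>No tracking snippet here.</p>",
}

print(pages_missing("google_analytics", PAGES))
print(pages_missing("google_tag_manager", PAGES))
```

With this sample data only `/landing` lacks an Analytics snippet, while none of the three pages load Google Tag Manager.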

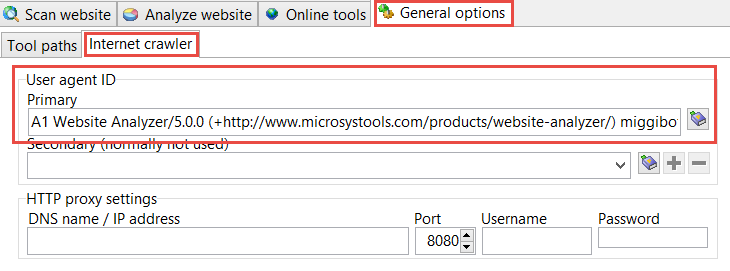

Finally, sometimes it’s interesting to see what happens when you change the user agent:

Over the coming months the developer of A1 Website Analyzer will publish a number of how-tos. In the meantime you can have a look at his Complete Guide on Website Audits with A1 Website Analyzer.


* With photo work by BadgerGravling, CJ Isherwood

My paid passion at Search Engine People sees me applying my passions and knowledge to a wide array of problems, ones I usually experience as challenges. People who know me know I love coffee.


The post A1 Website Analyzer: SEO Audit Tool & Website Crawler appeared first on Search Engine People Blog.
