May 21, 2018

We often get asked about the effectiveness of A/B testing. That’s not a surprise. We’re proud advocates of the ‘test everything’ approach here at Swrve, and we’re always delighted to show just how beneficial it can be for your business – if done right!

Of course, like most questions of the form ‘how much can X affect Y?’, it is hard to give a definitive answer; after all, every business has unique variables that influence results. There is also a difference between a single test and an extended program of related tests, and the latter is likely to deliver more significant benefit. But there’s nothing wrong with sharing real-world examples that show how much is at stake, and how much a business can gain, simply by testing key aspects of its product and establishing which of several variants performs best on the metrics it cares about.

The rest of this article looks at one such example.

About A/B Testing

Before we go further, it’s worth making sure we all understand exactly what A/B testing is. Put simply, it means serving two or more variants of the user experience to otherwise identical audiences and then establishing, through data, which is most effective according to agreed criteria, for example, which version delivered the most conversions. This lets businesses make big decisions based on actual user data, rather than on the opinion of the most persuasive person in the room or ‘gut instinct’.

Pretty much any aspect of the user experience can be A/B tested, but creating meaningful tests involves more than tweaking little things like button colors. The little things matter, but we always advise our customers to think about the bigger picture if they really want to make a difference. When the goal is to increase revenue, this often means testing the ‘key variables’. The following example shows a customer doing just that.

A Customer Success Story

A world-renowned publisher was struggling to deliver the revenue it wanted from its app. But instead of doing something drastic, like reducing the price or changing strategy entirely, it decided to A/B test its paywall limit (the number of free articles a user can read before having to subscribe) to see whether changing that number made a significant difference to the number of mobile subscriptions it received.

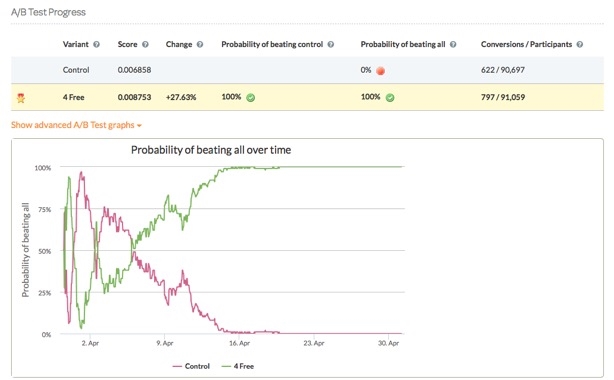

The test was simple, but well defined and carefully thought out. The normal paywall allowed readers 10 free articles before they had to subscribe to keep reading. For the A/B test, the audience was split 50/50: the control group kept the usual 10-article paywall, while the test group was shown an alternative paywall limiting readers to just 4 free articles. Each variant was delivered to over ninety thousand participants. It was a brave move that paid off handsomely.
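
The article doesn't say how users were allocated to each paywall. A common approach is deterministic hash-based bucketing, so a given user always sees the same variant without any stored state. The sketch below assumes that approach; `assign_variant`, `can_read_free_article`, the `"paywall-test"` experiment name and the `PAYWALL_LIMITS` table are hypothetical illustrations, not Swrve’s API. Only the 10 and 4 article limits come from the test described above.

```python
import hashlib

# Free-article limits per arm, from the test described above.
# The dictionary itself is a hypothetical illustration.
PAYWALL_LIMITS = {"control": 10, "test": 4}


def assign_variant(user_id: str, experiment: str = "paywall-test") -> str:
    """Deterministically assign a user to 'control' or 'test' (50/50 split).

    Hashing the experiment name together with the user ID means the same
    user always lands in the same arm, with no assignment table to store.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into an integer bucket in 0..99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "control" if bucket < 50 else "test"


def can_read_free_article(user_id: str, articles_read: int) -> bool:
    """True while the user is still under their arm's free-article limit."""
    limit = PAYWALL_LIMITS[assign_variant(user_id)]
    return articles_read < limit


if __name__ == "__main__":
    # Simulate assignment for 10,000 hypothetical user IDs.
    counts = {"control": 0, "test": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # roughly 5,000 per arm
```

Salting the hash with the experiment name keeps assignments independent across experiments, so users who landed in one test’s treatment arm aren’t systematically placed in the treatment arm of the next.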

The results of the test were surprising: readers were 27% more likely to convert with a 4-article paywall! Further analysis showed that, on average, users shown the 4-article limit brought in 20% more revenue over the month the campaign was running.
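
A figure like “27% more likely to convert” is a relative lift: the test group’s conversion rate divided by the control group’s, minus one. The sketch below shows that calculation alongside a standard two-proportion z-test, one common way to check that such a difference isn’t just noise. The article doesn’t publish raw conversion counts or say which test was used, so the counts in the usage block (1,000 and 1,270 conversions out of 90,000 users per arm) are purely hypothetical, chosen to give a 27% lift.

```python
import math


def relative_lift(conv_control: int, n_control: int, conv_test: int, n_test: int) -> float:
    """Relative change in conversion rate, test vs control (0.27 means +27%)."""
    rate_control = conv_control / n_control
    rate_test = conv_test / n_test
    return rate_test / rate_control - 1.0


def two_proportion_z_test(conv_control: int, n_control: int,
                          conv_test: int, n_test: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Uses the pooled conversion rate for the standard error and returns
    (z, p_value).
    """
    p_control = conv_control / n_control
    p_test = conv_test / n_test
    pooled = (conv_control + conv_test) / (n_control + n_test)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    z = (p_test - p_control) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts, NOT the publisher's real data.
    lift = relative_lift(1_000, 90_000, 1_270, 90_000)
    z, p = two_proportion_z_test(1_000, 90_000, 1_270, 90_000)
    print(f"lift={lift:.2%}  z={z:.2f}  p={p:.2e}")  # lift is 27.00%, z is about 5.70
```

With samples of around ninety thousand users per arm, even a modest absolute difference in conversion rate can be highly significant, which is one reason large audiences make tests like this conclusive.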

Let’s take a deeper look at the graphs below, which compare the performance of the two paywalls. The control group (in pink) saw the usual paywall of 10 free articles, and the test group (in green) saw the paywall of 4 free articles. The results are conclusive: on nearly every day, Average Revenue Per User (ARPU) for the test group was higher than for the control group, and the probability of the test group beating the control over time was 100%. Need we say more? The normal 10-article paywall was completely blown away.
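
The article doesn’t explain how the “probability of beating the control” was calculated. One common method is Bayesian. You model each arm’s conversion rate with a Beta posterior, draw from both posteriors many times, and count how often the test arm comes out ahead. The sketch below assumes uniform Beta(1, 1) priors and applies the method to conversion rate rather than ARPU. The function name and the counts in the usage note are hypothetical.

```python
import random


def probability_to_beat_control(conv_control: int, n_control: int,
                                conv_test: int, n_test: int,
                                draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(test conversion rate > control conversion rate).

    Assumes a uniform Beta(1, 1) prior on each arm, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    wins = 0
    for _ in range(draws):
        p_control = rng.betavariate(1 + conv_control, 1 + n_control - conv_control)
        p_test = rng.betavariate(1 + conv_test, 1 + n_test - conv_test)
        if p_test > p_control:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical counts: 1,000 vs 1,270 conversions out of 90,000 users each.
    print(probability_to_beat_control(1_000, 90_000, 1_270, 90_000))
```

With hypothetical counts of 1,000 and 1,270 conversions out of 90,000 users per arm, essentially every draw favors the test arm. A simulated “100%” therefore means the evidence is overwhelming, not that the outcome was literally certain.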

And so we return to the question that started this article: ‘how effective is A/B testing?’ For this example, at least, we can give you an answer. A subscription to this particular publication costs $90 a year, and customers have an estimated Lifetime Value (LTV) of $180 (i.e. 2 years of subscription). Without the paywall test, revenue for the publisher would have stood at $1.22m, but as a result of the test, revenue is projected to rise to $1.58m, a difference of roughly $350k.
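
The arithmetic behind these figures can be checked with a few lines of code. The sketch below uses the simplest LTV model consistent with the article, annual price multiplied by expected years subscribed. It ignores churn timing, discounting and renewals, and the article doesn’t describe its own LTV model beyond the 2-year figure. The helper names are hypical illustrations only.

```python
def lifetime_value(annual_price: float, expected_years: float) -> float:
    """Simple LTV: yearly subscription price times expected years subscribed."""
    return annual_price * expected_years


def projected_uplift(baseline_revenue: float,
                     projected_revenue: float) -> tuple[float, float]:
    """Return (absolute uplift, relative uplift) between two revenue projections."""
    absolute = projected_revenue - baseline_revenue
    return absolute, absolute / baseline_revenue


# Figures from the article ($90/year, 2-year lifetime, $1.22m vs $1.58m).
ltv = lifetime_value(90, 2)  # 180, matching the article's $180 LTV
absolute, relative = projected_uplift(1_220_000, 1_580_000)
print(ltv, absolute, f"{relative:.1%}")
```

Using the rounded totals, the absolute uplift is $360,000, about 29.5% over the baseline. The article’s $350k presumably comes from the unrounded projections, which it doesn’t publish.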

It’s safe to say that the answer to the question is: A/B testing can be hugely effective – and significantly increase revenue!

Digital & Social Articles on Business 2 Community
