There’s a philosophical statistics debate in the optimization world: Bayesian vs Frequentist.

This is not a new debate; Thomas Bayes’ “An Essay towards solving a Problem in the Doctrine of Chances” was published posthumously in 1763, and it’s been an academic argument ever since.

Recently, the issue has become relevant in the CRO world – especially with the announcement that VWO will be using Bayesian decisions (Google Experiments also uses Thompson sampling, which is informed by a Bayesian perspective).

So what the hell does Bayesian statistics mean for a/b testing? First, let’s summarize Bayesian and Frequentist approaches, and what the difference between them is.

Note: I’m not going to go too in-depth in the philosophical debate between the two approaches or the intricacies of Bayes’ Theorem. I’ve listed some further reading at the bottom of each section if you’re interested in learning more.

The Quick and Dirty Difference Between Frequentist and Bayesian Statistics

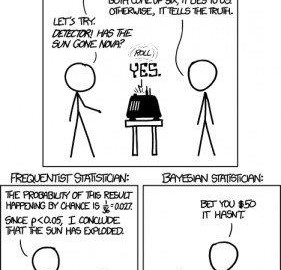

Image Source: lots of controversy around this cartoon


The Frequentist Approach

You’re probably familiar with the Frequentist approach to testing. It’s the model of statistics taught in most core-requirement college classes, and it’s the approach most often used by a/b testing software.

Basically, using a Frequentist method means making predictions on underlying truths of the experiment using only data from the current experiment.

As Leonid Pekelis wrote in an Optimizely article, “Frequentist arguments are more counter-factual in nature, and resemble the type of logic that lawyers use in court. Most of us learn frequentist statistics in entry-level statistics courses. A t-test, where we ask, “Is this variation different from the control?” is a basic building block of this approach.”
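To make the “is this variation different from the control?” question concrete, here is a minimal sketch of a two-proportion z-test, a close cousin of the t-test that is commonly applied to conversion counts. The function name and the visitor numbers are hypothetical, chosen only for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: control converts 100 of 1,000, variation converts 150 of 1,000.
z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Note that the p-value only uses data from the current experiment, which is the point Pekelis makes about the Frequentist approach.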

The Bayesian Approach

According to Leonid Pekelis, “Bayesian statistics take a more bottom-up approach to data analysis. This means that past knowledge of similar experiments is encoded into a statistical device known as a prior, and this prior is combined with current experiment data to make a conclusion on the test at hand.”

So, the biggest distinction is that Bayesian probability specifies that there is some prior probability.

The Bayesian approach goes something like this (summarized from this discussion):

- Define the prior distribution that incorporates your subjective beliefs about a parameter. The prior can be uninformative or informative.

- Gather data.

- Update your prior distribution with the data using Bayes’ theorem (though you can have Bayesian methods without explicit use of Bayes’ rule – see non-parametric Bayesian) to obtain a posterior distribution. The posterior distribution is a probability distribution that represents your updated beliefs about the parameter after having seen the data.

- Analyze the posterior distribution and summarize it (mean, median, sd, quantiles,…).
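The four steps above can be sketched with a simple grid approximation, which applies Bayes’ theorem directly to a list of candidate conversion rates. This is only an illustration: the flat prior, the function names, and the data (20 conversions from 100 visitors) are all assumptions, not something taken from the discussion being summarized.

```python
import math

def grid_posterior(conversions, visitors, prior=None, points=1001):
    """Approximate the posterior over a conversion rate on an evenly spaced grid."""
    grid = [i / (points - 1) for i in range(points)]
    # Step 1: the prior. A flat (uninformative) prior is assumed by default.
    if prior is None:
        prior = [1.0] * points
    # Step 3: Bayes' theorem, posterior is proportional to prior times likelihood.
    misses = visitors - conversions
    unnormalized = [w * p**conversions * (1 - p)**misses for p, w in zip(grid, prior)]
    total = sum(unnormalized)
    return grid, [u / total for u in unnormalized]

def summarize(grid, posterior):
    """Step 4: mean, standard deviation, and a central 95% interval."""
    mean = sum(p * w for p, w in zip(grid, posterior))
    sd = math.sqrt(sum((p - mean) ** 2 * w for p, w in zip(grid, posterior)))

    def quantile(q):
        running = 0.0
        for p, w in zip(grid, posterior):
            running += w
            if running >= q:
                return p
        return grid[-1]

    return {"mean": mean, "sd": sd, "q2.5": quantile(0.025), "q97.5": quantile(0.975)}

# Step 2: hypothetical data, 20 conversions from 100 visitors.
grid, posterior = grid_posterior(conversions=20, visitors=100)
summary = summarize(grid, posterior)
print(summary)
```

Passing a non-flat list as `prior` is how an informative prior would enter this sketch; everything else stays the same.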

To explain Bayesian reasoning in relation to conversion rates, Chris Stucchio gives the example of a hypothetical startup, BeerBnB. Its initial marketing efforts (ads in bar bathrooms) drew 794 unique visitors, 12 of whom created an account, giving the effort a 1.5% conversion rate. If the company can reach 10,000 visitors via toilet ads around the city, how many people can we expect to sign up? About 150.
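As a rough sketch of the arithmetic, and of how a Bayesian would attach uncertainty to that “about 150”, the snippet below uses the BeerBnB numbers with a flat Beta(1, 1) prior. The prior choice and the simulation approach are assumptions made for illustration, not necessarily how Stucchio works the example.

```python
import random

visitors, signups = 794, 12
audience = 10_000

# Point estimate, as in the text: 12 / 794 is roughly 1.5%.
rate = signups / visitors
print(f"observed rate: {rate:.2%}, expected signups: {rate * audience:.0f}")

# Assumption: a flat Beta(1, 1) prior, which gives a Beta(1 + 12, 1 + 782) posterior.
rng = random.Random(0)
draws = sorted(
    rng.betavariate(1 + signups, 1 + visitors - signups) for _ in range(20_000)
)
low = draws[int(0.025 * len(draws))] * audience
high = draws[int(0.975 * len(draws))] * audience
print(f"plausible range for expected signups: {low:.0f} to {high:.0f}")
```

The range only reflects uncertainty about the underlying conversion rate; it ignores the extra randomness in how the next 10,000 visitors actually behave.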

Bayesian Formula (Image Source)


Another example was something I found when reading Lean Analytics. There’s a case study about a restaurant, Solare. They know that if by 5 p.m. there are 50 reservations, then they can predict there will be around 250 covers for the night. This is a prior and can be updated with new sets of data.

Or as Boundless Rationality wrote, “A fundamental aspect of Bayesian inference is updating your beliefs in light of new evidence. Essentially, you start out with a prior belief and then update it in light of new evidence. An important aspect of this prior belief is your degree of confidence in it.”

Matt Gershoff, CEO of Conductrics, explained the difference between the two as such:

Matt Gershoff:


“The difference is that, in the Bayesian approach, the parameters that we are trying to estimate are treated as random variables. In the frequentist approach, they are fixed. Random variables are governed by their parameters (mean, variance, etc), and distributions (Gaussian, Poisson, binomial, etc). The prior is just the prior belief about these parameters. In this way, we can think of the Bayesian approach as treating probabilities as degrees of belief, rather than as frequencies generated by some unknown process.”

In summary, the difference is that in the Bayesian view, a probability is assigned to a hypothesis. In the frequentist view, a hypothesis is tested without being assigned a probability.

So, why the controversy?

According to Andrew Anderson from Malwarebytes: cognitive dissonance.

Andrew Anderson:


“People have a need to validate whatever approach they are using and are threatened when anyone suggests that they are being inefficient or using tools completely wrong. The math that is involved is usually one of the only pieces that most optimizers get complete control over and as such they over value their own opinions and fight back against counter arguments.”

He says it’s much easier to debate minute tasks and equations than it is to discuss the testing discipline and the whole role of optimization in an organization.

Another inflammatory illustration based on the heated debate (Image Source)


Dr. Rob Balon, CEO of The Benchmark Company, agrees:

Dr. Rob Balon:


“The argument in the academic community is mostly esoteric tail wagging anyway. In truth most analysts out of the ivory tower don’t care that much, if at all, about Bayesian vs. Frequentist.”

Though, Andrew Gelman was cited in a New York Times article defending Bayesian methods as a sort of double-check on spurious results. As an example, he re-evaluated a study using Bayesian statistics. The study had concluded that women who were ovulating were 20 percent more likely to vote for President Obama in 2012 than those who were not. Andrew added in data showing that people rarely change their voting preference during an election cycle – even during a menstrual cycle. Adding this info, the study’s statistical significance disappeared.

In my research, it’s clear there’s a large divide based on the philosophy of each approach. In essence, they tackle the same problems in slightly different ways.

What Does This Have to Do With A/B Testing?

“A Bayesian is one who, vaguely expecting a horse, and catching a glimpse of a donkey, strongly believes he has seen a mule.”

Though Rob Balon referred to the debate as mostly ‘esoteric tail-wagging’ (Matt Gershoff used the term ‘statistical theater’), there are business implications when it comes to a/b testing. Everyone wants faster and more accurate results that are easier to understand and communicate, and that’s what both methods attempt to do.

Since VWO announced their change to Bayesian decisions, there has been interest in the mathematical methods behind the tools. There has also been a lot of misunderstanding, most of which stems from a few common questions. Which is more accurate? Does it matter which one I use?

According to Chris Stucchio of VWO, “One is mathematical – it’s the difference between “proving” a scientific hypothesis and making a business decision. There are many cases where the statistics strongly support “choose B” in order to make money, but only weakly support “B is the best is a true statement”. The example I gave in the call is probably the simplest – B has a 50% chance of beating A by a lot (say B is 15% better) and a 50% chance of being approximately the same (say 0.25% worse to 0.25% better). In that case, it’s a great *business decision* to choose B – maybe you win something, maybe you lose nothing.

The other reason is about communication. Frequentist statistics are intuitively backwards and confuse the heck out of me. Studies have been done, and they show that most people (read: 80% or more) totally misinterpret frequentist stats, and often times they wrongly interpret them as bayesian probabilities. Given that, why not just give bayesian probabilities (which most people understand with little difficulty) to begin with?”
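Stucchio’s “choose B” example can be checked with a few lines of arithmetic. The sketch below treats the two outcomes as a simple two-scenario expected value; the helper name is hypothetical, and the “approximately the same” case is represented by its two endpoints (0.25% worse and 0.25% better).

```python
def expected_lift(scenarios):
    """Expected relative lift from (probability, lift) pairs whose probabilities sum to 1."""
    return sum(prob * lift for prob, lift in scenarios)

# 50% chance B is 15% better, 50% chance it is about the same as A.
pessimistic = expected_lift([(0.5, 0.15), (0.5, -0.0025)])
optimistic = expected_lift([(0.5, 0.15), (0.5, 0.0025)])
print(f"expected lift from choosing B: {pessimistic:.2%} to {optimistic:.2%}")
```

Even in the pessimistic reading the expected lift is clearly positive, although the evidence that “B is the best” is no stronger than a coin flip.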

Though, as Matt Gershoff explains: “Often, and I think this is a massive hole in the CRO thinking, is that we are trying to ESTIMATE the parameters for a given model (think targeting) in some rational way.”

He continues:

Matt Gershoff:

“The frequentist approach is a more risk averse approach and asks, “Hey, given all possible data sets that I might possibly see, what parameter settings are in some sense ‘best?’”

So the data is the random variable that we take expectations over. In the Bayesian case, it is, as mentioned above, the parameter(s) that is the random variable, and we then say ‘Hey, given this data, what is the best parameter setting, which can be thought of as a weighted average based on the prior values.”
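One way to read the “weighted average” remark: with a Beta prior on a conversion rate, the posterior mean is exactly a weighted average of the prior mean and the observed rate, with the weights set by how much data has come in. The sketch below assumes a Beta prior and hypothetical numbers; it is an illustration, not Gershoff’s own formulation.

```python
def posterior_mean_as_weighted_average(prior_a, prior_b, conversions, visitors):
    """Posterior mean of a Beta-Binomial model, written as a weighted average."""
    prior_mean = prior_a / (prior_a + prior_b)
    observed_rate = conversions / visitors
    # The prior counts act like (prior_a + prior_b) earlier observations.
    prior_weight = (prior_a + prior_b) / (prior_a + prior_b + visitors)
    return prior_weight * prior_mean + (1 - prior_weight) * observed_rate

# Hypothetical prior worth 100 visitors at a 10% rate, then 30 conversions from 200 visitors.
blended = posterior_mean_as_weighted_average(10, 90, 30, 200)
direct = (10 + 30) / (10 + 90 + 200)  # standard Beta posterior mean
print(blended, direct)
```

As more visitors arrive, the weight on the prior shrinks and the estimate moves toward the observed rate.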

Does it Matter Which You Use?

Some say yes, and some say no. Like almost everything, the answer is complicated and has proponents on both sides. Let’s start with the pro-bayesian argument…

In Defense of Bayesian Decisions

Lyst actually wrote an article last year about using Bayesian decisions. According to them, “We think this helps us avoid some common pitfalls of statistical testing and makes our analysis easier to understand and communicate to non-technical audiences.”

They say they prefer Bayesian methods for two reasons:

- Their end result is a probability distribution, rather than a point estimate. “Instead of having to think in terms of p-values, we can think directly in terms of the distribution of possible effects of our treatment…This makes it much easier to understand and communicate the results of the analysis.”

- Using an informative prior allows them to alleviate many of the issues that plague classical significance testing (they cite repeated testing and low base rate problem – though Evan Miller disputed the latter argument on this Hacker News thread)

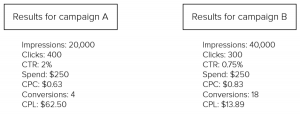

The also offered the following visuals, where they drew two samples from a Bernoulli distribution (yes/no, tails/heads), computed the p parameter (probability of heads) estimates for each sample, and then took their difference:

The article is a solid argument in favor of using a Bayesian method (they have a calculator you can use too), but they do leave with a caveat:

“It is worth noting that there is nothing magical about Bayesian methods. The advantages described above are entirely due to using an informative prior. If instead we used a flat (or uninformative) prior—where every possible value of our parameters is equally likely—all the problems would come back.”
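A rough sketch of that kind of experiment is below: two samples are drawn from the same Bernoulli distribution, p is estimated for each, and the difference is recorded. It is not Lyst’s code; the function name, the sample sizes, and the way the informative prior is expressed (as Beta pseudo-counts added to each estimate) are assumptions for illustration.

```python
import random
import statistics

def simulate_differences(true_p=0.1, n=100, runs=2000, prior_a=0.0, prior_b=0.0, seed=0):
    """Differences between p estimates of two samples drawn from the same Bernoulli(true_p)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(runs):
        estimates = []
        for _ in range(2):
            heads = sum(rng.random() < true_p for _ in range(n))
            # With prior_a = prior_b = 0 this is the raw sample proportion.
            estimates.append((heads + prior_a) / (n + prior_a + prior_b))
        diffs.append(estimates[0] - estimates[1])
    return diffs

raw = simulate_differences()
# Hypothetical informative prior: worth 100 observations centered on a 10% rate.
shrunk = simulate_differences(prior_a=10, prior_b=90)
print("spread without prior:", statistics.pstdev(raw))
print("spread with informative prior:", statistics.pstdev(shrunk))
```

Both samples come from the same distribution, so every nonzero difference is noise. The informative prior pulls the estimates together, which is the advantage the caveat attributes to the prior rather than to Bayesian methods as such.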

Chris Stucchio explains some of the reasons for VWO using Bayesian decisions:

“In my view, this matters for primarily two reasons: The first is understanding. I have a much easier time understanding what a Bayesian result means than a frequentist result, and a number of studies show I’m not alone. Most people – including practitioners of statistical methodology – significantly misunderstand what frequentist results mean.

This has actually been studied in pedagogical circles; approximately 100% of psychology students and 80% of statistical methodology professors don’t understand frequentist statistics.

The second reason is computational. Frequentist methods are popular in part because computing them is easy. Our old frequentist methods can be computed in microseconds using PHP, while our new Bayesian methods take minutes on a 64 core compute cluster. In historical times (read: 1990) our bayesian methodology would probably not be possible at all, at least on the scale we are doing it.”

But some disagree…

So there’s a good amount of support for Bayesian methods. While there aren’t many anti-Bayesians, there are a few Frequentists and people that just generally think there are more important things to worry about.

Andrew Anderson, for instance, says that for 99% of users, it doesn’t really matter.

Andrew Anderson:

“They are still going to get essentially the same answer, and for a large majority of them, put too much faith in confidence, and not understand the assumptions in the model. In those cases, Frequentist is easier to use and they might as well cut down on mental cost of trying to figure out priors and the such.

For the groups that have the ability to model priors and understand the difference in the answers that Bayesian gives versus frequentist approaches, Bayesian is usually better, though can actually be worse on small data sets. Both are equally impacted by variance though Bayesian approaches tend to handle biased population distribution better as they adapt better than Gaussian frequentist approaches.

That being said, almost all problems with a/b testing do not fall on how confidence is measured but instead in what they are choosing to compare and opinion validation versus exploration and exploitation. Moving the conversation away from simplistic confidence measures and towards things like multi-armed bandit thinking is far more valuable than worrying too much about how confidence is decided on. Honestly most groups would be far better off not calculating confidence at all.”

Dr. Rob Balon agrees, saying the Bayesian vs Frequentist argument is really not that relevant to A/B testing:

Dr. Rob Balon:

“Probability statistics are generally not used to any great extent in subsequent analysis. The Bayesian-Frequentist argument is more applicable regarding the choice of the variables to be tested in the A/B paradigm but even there most AB testers violate the hell out of research hypotheses, probability and confidence intervals.”

Tools and Methods

Most tools use Frequentist methods – though as mentioned above, VWO will be using Bayesian decisions. Google Experiments also uses Thompson sampling, which is informed by a Bayesian perspective.
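For readers unfamiliar with Thompson sampling, here is a minimal sketch of the textbook version for two or more variations with yes/no conversions. It is not Google’s implementation; the function name, the flat Beta(1, 1) priors, and the true conversion rates are assumptions for illustration.

```python
import random

def thompson_sampling(true_rates, rounds=5000, seed=0):
    """Simulate Thompson sampling; return how many visitors each variation received."""
    rng = random.Random(seed)
    wins = [0] * len(true_rates)
    losses = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variation from its Beta posterior.
        draws = [rng.betavariate(1 + w, 1 + l) for w, l in zip(wins, losses)]
        arm = draws.index(max(draws))
        # Show the variation with the highest draw and record the (simulated) outcome.
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
        else:
            losses[arm] += 1
    return [w + l for w, l in zip(wins, losses)]

# Hypothetical true rates: variation A converts at 5%, variation B at 15%.
pulls = thompson_sampling([0.05, 0.15])
print(pulls)
```

Traffic shifts toward the better-performing variation as evidence accumulates, which is the explore-versus-exploit trade-off that multi-armed bandit approaches are built around.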

Optimizely’s Stats Engine is based on Wald’s sequential test. This is a sequential version of the Neyman-Pearson hypothesis testing approach, so it is a frequentist approach.
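To give a feel for what a sequential test does, below is a sketch of the classic Wald sequential probability ratio test for a single stream of yes/no conversions. It is the textbook form, not Optimizely’s Stats Engine; the function name, the two hypothesized rates, and the error levels are assumptions for illustration.

```python
import math

def sprt(observations, p0, p1, alpha=0.05, beta=0.05):
    """Wald's SPRT for Bernoulli data: H0 says the rate is p0, H1 says it is p1."""
    upper = math.log((1 - beta) / alpha)   # cross this to accept H1
    lower = math.log(beta / (1 - alpha))   # cross this to accept H0
    llr = 0.0  # running log-likelihood ratio of H1 versus H0
    n = 0
    for n, converted in enumerate(observations, start=1):
        if converted:
            llr += math.log(p1 / p0)
        else:
            llr += math.log((1 - p1) / (1 - p0))
        if llr >= upper:
            return "accept_h1", n
        if llr <= lower:
            return "accept_h0", n
    return "continue", n

# Hypothetical check: is the conversion rate 10% (H0) or 20% (H1)?
print(sprt([1, 0, 1, 1, 0, 1, 1, 1], p0=0.1, p1=0.2))
```

The test looks at the data after every visitor and stops as soon as the evidence crosses one of the two thresholds, instead of waiting for a fixed sample size.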

Conductrics’ next release will blend together ideas from empirical Bayes, with targeting, to improve the efficiency of its optimization engine.

Though, Andrew Anderson doesn’t think we should spend much time worrying about the methods behind it. As he said about tools advertising different methods as features:

Andrew Anderson:

“This is why tools constantly spout this feature and focus so much time and improving their stats engines, despite the fact that it provides close to zero value to most or all of their users. Most people view testing as a way to push their existing discipline, and as such they can’t have anyone question any part of discipline or else their entire house of cards comes crashing down.”

Conclusion

Though you could dig forever and find strong arguments for and against each side, what it comes down to is that we’re solving the same problem in two different ways.

I like the analogy that Optimizely gave using bridges: “Just like suspension and arch bridges both successfully get cars across a gap, both Bayesian and Frequentist statistical methods provide an answer to the question: which variation performed best in an A/B test?”

Andrew Anderson also had a fun way of looking at it: “In many cases this debate is the same as arguing the style of the screen door on a submarine. It’s a fun argument that will change how things look, but the very act of having it means that you are drowning.”

Finally, you can mess up using either method in testing. As the New York Times article said, “Bayesian statistics, in short, can’t save us from bad science.”
