There are times when we overcompensate for weak SEO by diving too deep into keyword research. Keywords are undoubtedly important, but certain structural elements of a website can also determine the success of a search engine optimization campaign.

On closer scrutiny, website structure emerges as an important SEO factor, and one that even the bigger names in the industry often overlook. In this post, we will look at five specific ways website structure affects search engine optimization, regardless of the search engine. Although website structure often falls by the wayside, it has hard-hitting repercussions, especially for long-term SEO goals.

That said, before delving into the nooks and crannies of website structure, we need to understand clearly what it is and why it matters. Website structure is distinct from design: it is chiefly about how a website’s pages are linked to one another. Whether we are organizing pages so Google’s spiders can crawl them or so users can navigate seamlessly, website structure is of paramount importance to any SEO campaign.

Here are some of the SEO factors it affects:

Understanding Site Crawlability

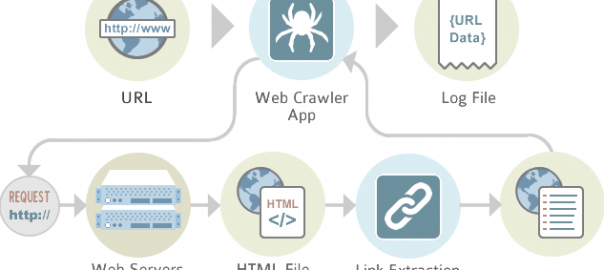

If we want to get rid of indexing issues, crawlability deserves a close look. When a search engine robot hits a dead end while crawling a website, that path of the crawl stops there, and the site’s SEO suffers.

In simple terms, site crawlability is the ability of a search engine’s robots to move through a website, including its subpages and topic sections, so that they can understand the site completely. A properly constructed website lets these robots move seamlessly from one page to the next, just as it lets users with many different needs do the same.

When structuring a website, we must avoid dead ends, that is, pages that do not link anywhere. One way to improve site crawlability is to give every page internal links. These act as bridges of sorts: related-post links and breadcrumbs give the robots paths to look at and follow.
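To make the idea of dead ends concrete, here is a minimal sketch, assuming we already have our site’s internal links as a dictionary that maps each page to the pages it links to (the page paths below are hypothetical). It flags dead ends, meaning pages with no outgoing internal links, and also pages that a link-following robot can never reach from the homepage.

```python
from collections import deque

# Hypothetical internal-link graph: each page maps to the pages it links to.
# In practice this would be built by crawling your own site.
SITE = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/seo-basics", "/blog/site-structure"],
    "/blog/seo-basics": ["/blog/site-structure", "/blog"],
    "/blog/site-structure": [],      # links nowhere: a dead end
    "/about": ["/"],
    "/old-landing-page": ["/"],      # nothing links here: unreachable
}


def find_dead_ends(graph: dict[str, list[str]]) -> list[str]:
    """Pages with no outgoing internal links."""
    return sorted(page for page, links in graph.items() if not links)


def find_unreachable(graph: dict[str, list[str]], start: str = "/") -> list[str]:
    """Pages a link-following robot cannot reach from the start page (breadth-first search)."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(graph) - seen)


if __name__ == "__main__":
    print("Dead ends:", find_dead_ends(SITE))        # ['/blog/site-structure']
    print("Unreachable:", find_unreachable(SITE))    # ['/old-landing-page']
```

Adding a related-posts link from `/blog/site-structure` back into the blog, and linking `/old-landing-page` from somewhere reachable, would clear both lists.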

Some of the best examples of highly crawlable websites are streaming services like 123movies, Hotstar, Netflix, Hulu and a few other recognizable names. On these websites, every page leads on to other addresses within the same website, so there are no dead ends. As a result, streaming websites are highly crawlable and reap the full indexing benefits.

Attending to Internal Links

When users visit a website, they should be able to move across its pages with ease, and internal links are what usually make this possible. A common rule of thumb is that users should need no more than three clicks to reach any given page. To achieve this, we must group and categorize links sensibly without compromising the website’s stability.
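Click depth is easy to measure once the internal links are known. The sketch below is a minimal illustration, assuming a hypothetical site map stored as a dictionary: a breadth-first search from the homepage finds the fewest clicks needed to reach each page, and any page deeper than three clicks is flagged, following the common three-click rule of thumb.

```python
from collections import deque

# Hypothetical site map: page -> pages it links to.
SITE = {
    "/": ["/products", "/blog"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/running"],
    "/products/shoes/running": ["/products/shoes/running/trail"],
    "/products/shoes/running/trail": [],
    "/blog": [],
}

MAX_CLICKS = 3  # three-click rule of thumb


def click_depths(graph: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Fewest clicks needed to reach each reachable page from the homepage."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depth:          # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def too_deep(graph: dict[str, list[str]], home: str = "/", limit: int = MAX_CLICKS) -> list[str]:
    """Pages that need more than `limit` clicks to reach."""
    return sorted(p for p, d in click_depths(graph, home).items() if d > limit)


if __name__ == "__main__":
    for page, d in sorted(click_depths(SITE).items(), key=lambda kv: kv[1]):
        print(d, page)
    print("Deeper than", MAX_CLICKS, "clicks:", too_deep(SITE))
```

Here the trail-running page sits four clicks deep. Adding a direct link to it from `/products` would bring it to depth 2, which is exactly how internal links reduce page depth.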

Internal linking has several advantages for search engine optimization:

- With internal links in place, search engines can easily find related pages through keyword-specific anchor text.

- Internal links reduce page depth, that is, the number of clicks needed to reach a page.

- Content is much easier to access when pages are interlinked, which makes for a better user experience (the topic of the next section).

- Finally, on search engine result pages (SERPs), well-interlinked pages tend to enjoy a ranking advantage.

We often see writers link back to older content while drafting new posts. A better approach is to close the loop by also doing the opposite: updating older pages so that they link to newer ones. This way, internal links complement each other.
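Finding which older pages to update can be partly automated. The following is a minimal sketch, assuming a hypothetical `Post` record with a URL, a publication date, the post text and the set of internal URLs it already links to; it lists older posts that mention a chosen keyword but do not yet link to the new post. A real site would load this data from its CMS, and a simple substring match is only a rough proxy for relevance.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Post:
    url: str
    published: date
    text: str
    links: set[str] = field(default_factory=set)  # internal URLs this post already links to


def backlink_candidates(posts: list[Post], new_post: Post, keyword: str) -> list[str]:
    """Older posts that mention `keyword` but do not yet link to `new_post`."""
    kw = keyword.lower()
    return [
        p.url
        for p in posts
        if p.published < new_post.published      # only older posts
        and kw in p.text.lower()                 # topically related
        and new_post.url not in p.links          # not already linking
    ]


if __name__ == "__main__":
    new = Post("/blog/url-structure", date(2020, 5, 1), "How to structure URLs.")
    posts = [
        Post("/blog/seo-basics", date(2019, 1, 10), "Keywords, URL structure and more."),
        Post("/blog/site-maps", date(2019, 6, 2), "Sitemaps and URL structure.",
             links={"/blog/url-structure"}),
        Post("/blog/page-speed", date(2019, 8, 20), "Making pages load faster."),
        new,
    ]
    print(backlink_candidates(posts, new, "URL structure"))  # ['/blog/seo-basics']
```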

Valuing User Experience

In the era of Google’s RankBrain algorithm, user engagement and user experience matter a great deal, which is reason enough to value website structure as a whole. We cannot expect visitors to stay on a page for long if the website lacks quality content, internal links, proper navigation and a host of other important attributes.

A poorly structured website drives visitors away, which can seriously damage an existing SEO strategy. In addition, several user-experience metrics can indicate to Google how effective a website is. From click-through rate to bounce rate, these signals help show whether users find a particular website useful. Time spent on a website is also a good indication that visitors are resonating with the content.
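The metrics mentioned here boil down to simple ratios. The sketch below uses their classic definitions: click-through rate as clicks divided by search impressions, and bounce rate as single-page sessions divided by all sessions. Analytics tools differ in the details (newer versions of Google Analytics, for instance, define bounce rate in terms of non-engaged sessions), and the numbers in the example are made up for illustration.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Share of search impressions that turned into clicks."""
    return clicks / impressions if impressions else 0.0


def bounce_rate(single_page_sessions: int, total_sessions: int) -> float:
    """Share of sessions in which the visitor viewed only one page."""
    return single_page_sessions / total_sessions if total_sessions else 0.0


def average_time_on_site(session_seconds: list[float]) -> float:
    """Mean session duration in seconds."""
    return sum(session_seconds) / len(session_seconds) if session_seconds else 0.0


if __name__ == "__main__":
    # Illustrative numbers only.
    print(f"CTR: {click_through_rate(120, 4000):.1%}")                         # CTR: 3.0%
    print(f"Bounce rate: {bounce_rate(450, 1000):.1%}")                         # Bounce rate: 45.0%
    print(f"Avg. time on site: {average_time_on_site([30, 95, 240, 15]):.0f} s")  # Avg. time on site: 95 s
```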

If these statistics are in our favor, Google is likely to take them into account when ranking search results. In practice, a few steps can quickly improve the overall user experience. First, make sure every click-through leads where visitors expect it to. Second, present information as clearly as possible. Finally, it all comes down to navigation, which must be crisp and intuitive.

Managing Duplicate Pages

Haven’t we all heard about duplicate content and its catastrophic repercussions? Sometimes the same piece of content is mistakenly uploaded twice on a website. This can drag down the website’s rankings, because search engines have to decide which copy to show in their results. Google Search Console is one of the best tools for spotting duplicate-content problems.
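Accidental exact duplicates, such as the same post uploaded twice, can be caught before Search Console ever reports them. Here is a minimal sketch, assuming we have each page’s HTML in a dictionary keyed by URL (the URLs are hypothetical): it strips tags, case and extra whitespace, hashes what remains with SHA-256, and groups URLs whose hashes match. It only catches identical text; near-duplicates need fuzzier comparison, and Search Console remains the better guide to what Google actually sees.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(html_text: str) -> str:
    """Hash of the page text after removing tags, case and extra whitespace."""
    text = re.sub(r"<[^>]+>", " ", html_text)          # crude tag removal
    text = re.sub(r"\s+", " ", text).strip().lower()   # normalize whitespace and case
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized content is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[fingerprint(html)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    pages = {
        "/blog/site-structure": "<h1>Site Structure</h1><p>Five ways...</p>",
        "/blog/site-structure-2": "<h1>Site structure</h1>\n<p>Five ways...</p>",
        "/blog/url-tips": "<h1>URL Tips</h1><p>Keep URLs short.</p>",
    }
    print(find_duplicates(pages))  # [['/blog/site-structure', '/blog/site-structure-2']]
```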

Assessing URL Structure

Our URL structure has an underlying effect on the entire SEO campaign. The first thing to check is whether URLs contain extraneous characters such as #, @, % and the like.

Having these characters inside a URL dilutes the focus on its keywords. Where a separator is needed, it is better to mark out a keyword phrase with dashes; Google’s own URL guidelines recommend hyphens over underscores for separating words. This in turn is expected to help SERP rankings.
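A common way to keep extraneous characters out of URLs is to generate each slug from the page title. The sketch below is one possible convention, not a standard: it folds accented letters to plain ASCII, lowercases everything, and replaces every run of other characters (including #, @, %, spaces and underscores) with a single hyphen.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Fold accented letters to plain ASCII where possible (e.g. "é" -> "e").
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace each run of characters other than a-z and 0-9 with one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


if __name__ == "__main__":
    print(slugify("5 Ways Website Structure Affects SEO @ 100%!"))
    # 5-ways-website-structure-affects-seo-100
```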

The next important factor is URL length. Most successful streaming websites have short names, which makes them easier for users to pronounce and remember. If detailed reports are to be believed, the top 100 URLs average 38 characters, with the nominal limit somewhere in the ballpark of 36 characters.
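Checking URL length across a site takes only a few lines. In this minimal sketch the threshold is a parameter; its default of 38 characters simply echoes the average quoted above and is an assumption rather than a rule. Length here is counted over the full URL string, scheme included.

```python
# Assumed threshold, echoing the figure quoted above; tune it for your own site.
MAX_URL_LENGTH = 38


def audit_url_lengths(urls: list[str], limit: int = MAX_URL_LENGTH) -> tuple[float, list[str]]:
    """Return the average URL length and the URLs longer than `limit` characters."""
    lengths = {url: len(url) for url in urls}
    average = sum(lengths.values()) / len(lengths) if lengths else 0.0
    too_long = sorted(url for url, n in lengths.items() if n > limit)
    return average, too_long


if __name__ == "__main__":
    avg, too_long = audit_url_lengths([
        "https://example.com/seo",
        "https://example.com/blog/how-website-structure-affects-search-engine-optimization",
    ])
    print(f"Average length: {avg:.1f}")
    print("Too long:", too_long)
```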

The Wrap

A well-structured website is probably the first step towards a successful SEO campaign. If we break the process of website creation into stages, conceptualization clearly comes first. Once planning is done, each of the five areas covered above should be addressed step by step.

Trust me, there is also a lot more to website structure and content than just niche-specific keywords.

Digital & Social Articles on Business 2 Community