AI is transitioning from a 2023 electrified by ChatGPT to a 2024 when the tech industry and the Fortune 500 will try to make new transformer-based language models perform meaningful work and create real value. But that transition could be seriously slowed because of the way AI companies routinely train their models: by feeding them large amounts of data, some of it copyrighted, that they scrape from the web.

Copyright holders filed a number of lawsuits against AI companies in 2023, but the New York Times suit against OpenAI and Microsoft, filed late last month, is the first from a major news media publisher. The case may come to be seen as a landmark that clarifies the rights and responsibilities of copyright holders and AI companies.

At issue is the fair use doctrine of the U.S. copyright statute and how it applies to AI companies’ use of information culled from the public internet to train models.

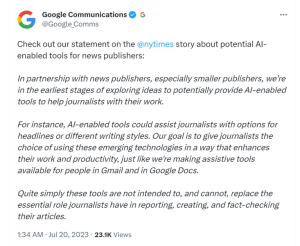

OpenAI and Microsoft will argue that, in general, the use of copyrighted data, including that from the New York Times, is covered under fair use. “Training AI models using publicly available internet materials is fair use, as supported by long-standing and widely accepted precedents,” OpenAI said Monday in a blog post titled “OpenAI and Journalism.” “We view this principle as fair to creators, necessary for innovators, and critical for US competitiveness.”

The Times lawyers will argue that the defendants’ use of its journalism stretches beyond the bounds of fair use. “One of the things that’s very important in analyzing a fair use defense is the potential harm to the marketplace for the original producer of the copyrighted material,” says intellectual property attorney Thomas C. Carey, a partner at Boston-based Sunstein LLP.

The Times complaint describes two main harms. “One is, if people can get their content for free by asking ChatGPT, then why would you subscribe to the Times?” Carey says. “And the other is that ChatGPT could spit out hallucinations that falsely attribute to the New York Times things that they didn’t write.”

“I don’t think that OpenAI has a good fair use defense here,” Carey adds. “I think that they are in some sense in competition with the New York Times as a source of knowledge.”

The two parties had been discussing a licensing deal allowing OpenAI to use Times content (OpenAI has already signed such deals with other publishers), and, the Times has stated, the lawsuit resulted from a breakdown in negotiations over the cost.

Does ChatGPT “transform” publishers’ content?

At trial, the OpenAI and Microsoft lawyers may argue that most of the output generated by ChatGPT or Microsoft’s Copilot is “transformative,” meaning that the chatbots generate “original” answers that are influenced by—but not cribbed from—their training materials. They may argue that the chatbots’ output, then, can’t be seen as a replacement for, or competition to, the Times content.

“‘[T]ransformative’ uses are more likely to be considered fair,” advises the U.S. Copyright Office. “Transformative uses are those that add something new, with a further purpose or different character, and do not substitute for the original use of the work.”

In their training, large language models process huge amounts of text from the internet and eventually form a many-dimensional vector space that maps how words commonly relate to each other in various contexts. They generate content by processing a user’s prompt and then generating a string of words that are statistically likely to follow from the words in the prompt. Regurgitating whole pieces of content from a specific source would require researchers to direct the AI model to memorize that content during the fine-tuning stage.
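
The idea of generating words that are statistically likely to follow the prompt can be illustrated with a toy sketch. The example below is an assumption-laden stand-in, not how ChatGPT works: real large language models use neural networks over sub-word tokens and far more context, while this sketch only counts which word follows which in a tiny made-up corpus. The function names (`train_bigram_counts`, `generate`) and the sample sentences are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_words=8, seed=0):
    """Extend the prompt one word at a time, sampling each next word in
    proportion to how often it followed the previous word in training."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = prompt.lower().split()
    if not output:
        return ""
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:  # the last word was never seen with a follower
            break
        words, counts = zip(*candidates.items())
        output.append(rng.choices(words, weights=counts, k=1)[0])
    return " ".join(output)


# Made-up training text, for illustration only.
corpus = "the court heard the case . the court weighed the fair use defense ."
model = train_bigram_counts(corpus)
print(generate(model, "the court"))
```

Because every step picks from words that actually followed the previous word in the training text, a model trained on very little text (or on the same passage many times) will tend to reproduce that text closely.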

But the Times complaint contains numerous examples where ChatGPT generated answers that seem to regurgitate large chunks of Times articles almost word for word. And, as the Times claims, the bot sometimes does so without citing the Times as its source. The OpenAI lawyers may have a tough time convincing the court that those responses are transformative and therefore do not violate copyright.

OpenAI says those direct regurgitations were errors. The company says in the blog post they were the result of a “rare failure of the learning process” that it is “continually” trying to fix. The company says this kind of error is more likely to happen with content that appears multiple times in the model’s training data “like if pieces of it appear on lots of different public websites.”

But that’s not the New York Times’s problem. “I think there’s a reasonably good chance that OpenAI and Microsoft are going to end up owing the New York Times a lot of money,” says Sunstein’s Carey. OpenAI and Microsoft may have to pay retroactive damages for the prior regurgitation of Times content by ChatGPT and Microsoft’s Copilot (formerly Bing Chat), he says. And it’s likely the defendants will have to either stop using Times content for model training or enter into a licensing agreement to continue using it.

OpenAI and Microsoft could also be directed by the court to make adjustments to their models to prevent them from regurgitating Times content. OpenAI has already made changes to its DALL-E image generation models to prevent the tech from mimicking living artists.

Setting precedent

The AI companies may also have weakened their fair-use argument by already agreeing to pay some publishers for their content (and by entering licensing negotiations with the Times), says Katie Gardner, a partner at the law firm Gunderson Dettmer. OpenAI has already struck such licensing deals with the Associated Press and Politico owner Axel Springer. “As [publishers] find ways to monetize it through licensing, then it’s going to be much harder for those [AI] companies to say, ‘Hey, it’s fair use for me to just take it and use it for free,’” Gardner says.

Publishers have seen their profits squeezed by social media and by restrictions on the way interactive ads can be targeted. They’re naturally very interested in finding new ways—beyond advertising—to monetize their content. Licensing content to AI developers may be the key to their survival as consumers get more of their news and information from AI engines.

Meanwhile, OpenAI and Microsoft are not short of cash. Microsoft put $10 billion into OpenAI a year ago. OpenAI is reportedly preparing to raise more money, at a valuation as high as $100 billion. And those companies certainly aren’t alone. With ready access to billions in funding, many AI companies will see the publisher licensing agreements as a good way to manage risk and stay out of court.

The potential cost of the licensing deals likely won’t cool the ardor of the VC community for new AI investment opportunities. “All I do is work with venture-backed companies and VCs that invest in them, and this litigation has not slowed down any of that a bit,” Gardner says. “I think [VCs] are taking the bet that these companies are going to be successful notwithstanding the litigation.”

They may also be counting on the likelihood that the Times’s case against OpenAI and Microsoft will take years to play out. “In some of the big copyright cases we’ve seen it’s taken nearly a decade from start to finish,” Gardner says.

And there are other potentially consequential things going on too. The U.S. Copyright Office is now soliciting commentary on whether to, or how to, recommend that Congress update the copyright law for the AI age. But that—especially with the chaos in the lower house—could also take a long time to materialize.

“I don’t know that we can wait that long; they have to come up with some type of solution,” Gardner says. “From a policy perspective, the United States doesn’t want companies going abroad to other places that have publicly stated that they are going to be much friendlier on this stuff.”

Indeed, OpenAI seems to nod at this idea in its blog post: “Other regions and countries, including the European Union, Japan, Singapore, and Israel also have laws that permit training models on copyrighted content—an advantage for AI innovation, advancement, and investment.”

With hundreds of tech companies now sinking big R&D dollars into new AI models, and thousands more startups building businesses on top of existing foundation models, any clarity provided by the outcome of the lawsuit would be welcome. If uncertainty in the law persists, and major copyright lawsuits continue being filed, investors might eventually begin to look for less risky businesses to invest in.

The worst result of all this could be that only the largest and richest of the AI companies can afford to pay for big licensing agreements, leaving smaller companies with inferior training data, and further concentrating control of the most powerful foundation models in the hands of a chosen few.
