Elon Musk announced this week the launch of his new AI company, called xAI. The company, which already boasts an extremely talented staff, will presumably develop large language models (LLMs) and may reflect Musk's desire to challenge current AI leader OpenAI. Musk was one of the founders of OpenAI in 2015 but cut ties with the company in 2018 and has since openly criticized its business model and leadership.

On the company's website, xAI says it seeks to "understand the nature of the universe." That short mission statement suggests the company may train LLMs to explore big, scientifically complex questions. The LLMs might be trained, for example, to model extremely complex phenomena such as dark matter or black holes, or ecosystems now suffering from climate change. Many in the scientific community regard this use of LLMs as an area of high potential.

Bias risks

Few details were given about xAI, but we now know the names of the core team of researchers and AI practitioners tapped to build out Elon Musk's vision for artificial intelligence. The xAI website lists 12 men and zero women as the core group of researchers. The group is advised by Dan Hendrycks, director of the Center for AI Safety.

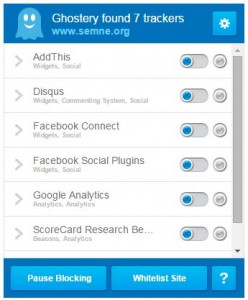

That lack of representation matters, because LLMs are very prone to reflecting and regurgitating bias from their training data. LLMs are trained on language, usually scraped in large quantities from the internet, and not necessarily on facts. Scrubbing out the bias and inaccuracies takes countless hours of human work and judgment. With a "free speech absolutist" at the top of the organization, how rigorously will xAI remove gender bias from the training data? How seriously will it consider putting guardrails around its models to keep them from repeating biases in their responses? If the training process looks anything like Musk's Twitter moderation policies, xAI's models might talk like, well, Musk. In serious, real-world AI applications, that doesn't work.

Advantages

On the other hand, the men on xAI's team have significant experience at pedigreed organizations behind them. They come from places like Google DeepMind, OpenAI, and the AI research program at the University of Toronto. They are all widely published and seem capable of pushing AI research well past today's limitations.

They will likely have the resources they need. The group will be generously funded by Musk. It will presumably have access to researchers and engineers already working on AI at Tesla and SpaceX, as well as to copious amounts of computing power. Musk, recall, reportedly purchased roughly 10,000 GPUs earlier this year to power a then-unnamed generative AI project within Twitter.

LLMs must be trained on sizable amounts of organic text covering a wide range of topics. That's why OpenAI trained the models underpinning ChatGPT partly on Twitter conversations. Musk has already taken steps to prevent other companies from accessing that rich source material for training language models. If Twitter data is walled off so that only xAI can use it to train models, that could become a major arrow in Musk's quiver.

Is it safe?

AI systems, like nuclear weapons, are potentially extremely dangerous to humans. Many believe they could destroy jobs in the near term and even pose an existential threat to humanity in the future. Earlier this year, Musk signed a letter calling for a "pause" in the development of LLMs for those reasons. Now the unpredictable (and often downright strange) tech tycoon will be directing the development of powerful generative AI models. That alone might renew the push for more regulation of how large AI models are built, trained, and used. While the EU is quickly moving a package of regulations toward passage, the U.S. seems far from passing a bill, despite urgings from the White House to do so by 2026.

I'm hesitant to say that xAI is merely a vanity project for Musk. The company isn't likely to challenge OpenAI in LLMs very soon, but it could develop other kinds of models and apply them in meaningful ways. The main concern is safety. Musk might get so swept up in the AI arms race now underway that he directs his team to cut corners on safety.
