Marketing gurus across time, space, and dimension herald the unceasing value and absolute necessity of testing.

Unless you plan on thriving by mere luck alone (we wouldn’t recommend that strategy), testing is a truly essential tactic for apps. You can test an endless number of variables to help your app reach its ultimate form (for some examples of what you can test, check out our Ultimate Guide to Mobile App A/B Testing).

Testing helps you learn, improve, and grow. It's the key to success, as you've no doubt heard many times before.

But the truth is, while what you test is certainly important, how you test may be even more critical. If you don’t set up your testing properly, it’s much more difficult to correctly measure results.

1. Test One Thing At a Time

Each A/B experiment should test one single variable.

If you test multiple variables in a single test, you won't know which variable accounts for the change. Isolating a single variable can be trickier than you might think. For example, imagine that you want to test how including an emoji affects engagement with a push message.

Not only should the two messages have the exact same copy and punctuation, but the emoji and non-emoji versions should also be pushed on the same day, at the same time, to the same audience segment.

In order to test multiple variables, you’ll have to run separate tests for each change you want to try out.
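One way to keep yourself honest is to describe each variant as a set of fields and check, before launch, that exactly one field differs. The sketch below assumes a simple dictionary-based campaign description; the field names (`copy`, `send_time`, `segment`, `emoji`) and the helper functions are hypothetical and not tied to any particular push platform.

```python
_MISSING = object()  # sentinel so a missing field is not confused with a None value


def differing_fields(variant_a: dict, variant_b: dict) -> list:
    """Return the sorted names of fields whose values differ between two variants."""
    keys = set(variant_a) | set(variant_b)
    # A field present in only one variant counts as a difference.
    return sorted(
        k for k in keys if variant_a.get(k, _MISSING) != variant_b.get(k, _MISSING)
    )


def assert_single_variable(variant_a: dict, variant_b: dict) -> str:
    """Raise if the variants differ in anything other than exactly one field."""
    diffs = differing_fields(variant_a, variant_b)
    if len(diffs) != 1:
        raise ValueError(f"Expected exactly one differing field, found {diffs}")
    return diffs[0]


# Hypothetical emoji test: same copy, same send time, same segment.
base = {
    "copy": "Your next read is waiting",
    "send_time": "18:00",
    "segment": "read_4_articles",
    "emoji": None,
}
with_emoji = {**base, "emoji": "📚"}

print(assert_single_variable(base, with_emoji))  # prints "emoji"
```

If someone also changes the send time on one variant, the check fails loudly instead of letting a two-variable test slip through.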

2. Use Control Groups For Better A/B Testing

Control groups are by far the best way to build trust in your A/B testing results. They ground your tests, giving you a reliable baseline to measure your experiments against.

What is a Control Group?

A control group is a neutral segment of your testing audience that is unaffected by your experiment.

In a scientific study with a no-treatment control, the control group gets neither the placebo pill nor the experimental medicine; its members simply carry on with their status quo existence (the control-group mice are probably the luckiest of the gang, continuing their cheese-munching lives unharassed).

For app marketing, control groups are neutral ground, serving as your baseline to compare experiments against.

If you’re testing a push message, they don’t get it.

If you’re testing a new in-app message, they don’t see it.

To better understand how control groups work, imagine that you’re testing a push message to send to users who have read four articles in your app.

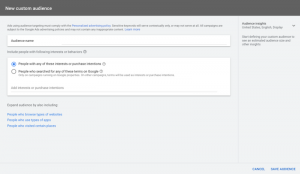

You have two different versions you want to try out. However, it's not a straight 50/50 split of your audience: you first set aside 5% of your audience to receive no push message at all, then split the remaining 95% evenly, with half getting version A and half getting version B.

That small set-aside segment is your control group, and it provides the foundation for your measurements.

You don't want your control group to be your entire app user base; it should be a small segment of your qualified audience that acts as a baseline benchmark for comparison.
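A common way (though not the only one) to implement a split like the 5% control plus even A/B split described above is to hash each user ID into a fixed number of buckets. The sketch below assumes string user IDs and that the qualifying audience (users who have read four articles) has already been filtered; the experiment name, bucket count, and `assign_group` function are hypothetical choices for illustration.

```python
import hashlib
from collections import Counter

CONTROL_SHARE = 0.05  # 5% of the qualified audience gets no message
BUCKETS = 10_000      # resolution of the hash-based split


def assign_group(user_id: str, experiment: str) -> str:
    """Deterministically assign a user to 'control', 'A', or 'B'.

    Hashing experiment name + user ID means a user always lands in the same
    group for a given experiment, while different experiments split independently.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % BUCKETS

    control_cutoff = round(BUCKETS * CONTROL_SHARE)                     # 500
    variant_cutoff = control_cutoff + (BUCKETS - control_cutoff) // 2  # 5250

    if bucket < control_cutoff:
        return "control"
    if bucket < variant_cutoff:
        return "A"
    return "B"


# Usage: assign a batch of hypothetical users and inspect the group sizes.
counts = Counter(assign_group(f"user-{i}", "push_4_articles") for i in range(100_000))
print(counts)  # roughly 5% control and about 47.5% each for A and B
```

Deterministic assignment matters here: if a user were re-randomized on every send, the same person could see version A one day and nothing the next, which muddies the comparison against control.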

Why Control Groups Are Essential For Reliable Data

Without a control group, you’re testing blind with no perspective. You may see numbers increasing, but what are they increasing against?

It’s the control group that allows you to make sense of your data. Without perspective gained by a control group, it’s easy to misunderstand what you’re seeing.

M.C. Escher’s “Relativity” shows the importance of perspective

After your push messaging test is finished, you can look at your results. How did the variant groups compare against the control group? Maybe Message A performed better than Message B, but both had a lower conversion rate than the control group. In that case, sending either message did more harm than sending nothing, even though Message A "won" the head-to-head comparison.
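The comparison above boils down to computing each group's conversion rate and its relative lift over the control group. The sketch below assumes you can export conversions and user counts per group from your analytics tool; the `compare_to_control` function and the counts in the usage lines are made up for illustration.

```python
def compare_to_control(results: dict) -> dict:
    """Compute each group's conversion rate and its relative lift over control.

    `results` maps a group name to (conversions, users); a "control" entry is
    required and every group is assumed to have at least one user.
    Lift = (group rate - control rate) / control rate, so a negative lift means
    the group converted worse than users who received no message at all.
    """
    control_conversions, control_users = results["control"]
    control_rate = control_conversions / control_users

    report = {}
    for group, (conversions, users) in results.items():
        rate = conversions / users
        # Lift is undefined when the control group has no conversions.
        lift = (rate - control_rate) / control_rate if control_rate > 0 else float("nan")
        report[group] = {"rate": rate, "lift_vs_control": lift}
    return report


# Hypothetical counts, for illustration only.
report = compare_to_control({"control": (60, 1200), "A": (54, 1200), "B": (48, 1200)})
for group, stats in report.items():
    print(f"{group}: rate={stats['rate']:.1%}, lift={stats['lift_vs_control']:+.1%}")
```

With these made-up counts, A beats B but both show a negative lift against control, which is exactly the situation a variant-only comparison would hide.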

Enabling a control group in each push or in-app messaging campaign you run allows you to see the true impact of that campaign.

3. CTR Won't Get You Very Far – Measure Other Stuff Instead

Marketers have been obsessed with click-through rates ever since they became trackable. No one denies that CTR matters: it shows how clickable your copy is and helps you figure out which text and design resonate best with users.

However, we have far more data now than we did when CTR first became trackable. Today's app analytics platforms can measure a multitude of complex metrics, such as sessions, transactions, and custom events like articles read, check-ins, or levels completed.

In fact, advanced mobile analytics tools can follow users past the click to their point of conversion, and even track what they do after converting. Do they continue to use your app and buy again? What does their projected lifetime value (LTV) look like? Amazingly enough, analytics can give you these answers.

If we didn’t have all that rich user data, click-through rates might be the best we could do. That’s not the case though – today we can measure an endless number of metrics, and those can be used to assess engagement and retention rates, which are a better measure of business impact than simple CTRs.

If You Had to Choose CTR or Retention, Which Would You Choose?

You may be thinking – well of course I care more about retention than CTRs. But they go hand in hand, don’t they? If users are clicking push messages, they will want to stick around, right?

The unsettling reality is that CTRs and retention don’t always correlate, especially with sensitive app marketing tactics like push messages.

Even with carefully timed campaigns, many users will receive your push messages while they’re on the move. A user may see your message but not click on it at that moment, choosing instead to visit your app later on, at a more convenient time. A message with a low click rate could still end up having a positive impact on retention.
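To catch that effect, report CTR and a retention metric side by side for every group, including control. The sketch below assumes your analytics tool can export one record per user with these fields; the `UserOutcome` field names are hypothetical, and "active on day 7" is just one of several reasonable retention definitions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class UserOutcome:
    group: str           # "control", "A", or "B"
    received_push: bool  # control users never receive the message
    clicked_push: bool   # tapped the push notification itself
    active_day_7: bool   # opened the app by any route 7 days later


def summarize(outcomes: List[UserOutcome]) -> Dict[str, Dict[str, Optional[float]]]:
    """Report CTR and day-7 retention side by side for each group."""
    groups: Dict[str, List[UserOutcome]] = {}
    for outcome in outcomes:
        groups.setdefault(outcome.group, []).append(outcome)

    summary = {}
    for name, members in groups.items():
        recipients = [m for m in members if m.received_push]
        # CTR only makes sense for users who actually got the push.
        ctr = sum(m.clicked_push for m in recipients) / len(recipients) if recipients else None
        # Retention counts every member, whether or not they clicked.
        retention = sum(m.active_day_7 for m in members) / len(members)
        summary[name] = {"ctr": ctr, "day7_retention": retention}
    return summary
```

Because retention is counted over everyone in the group, a user who ignores the notification but opens the app later still counts in the message's favor, so a variant with the lower CTR can come out ahead on retention.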

Instead of putting all your trust in CTRs, you’ll need to measure with metrics that relate to your unique app needs and goals.

What impact do you want your campaigns to have? Do you want more app sessions, more transactions, longer session lengths? How do those goals compare against broader relationship-strengthening goals like retention and engagement?

Looking at click-through rates in isolation is a poor way of testing. Instead, test strategically with goals that connect to your business.

Best Practices Breakdown for App A/B Testing

We’re summing up what we’ve discussed today in a quick best practices breakdown.

- Only Test One Change At a Time. Make sure you only implement one change per A/B test for reliable results.

- Always Use a Control Group. Control groups help you accurately evaluate your testing results – use them in testing whenever you can.

- Look Beyond Click-Through Rates. When it comes to app A/B testing, don’t get too caught up in CTRs. Instead, take a big picture look at your long-term goals and how your experiments affect higher-level metrics.

Do you have any A/B test techniques you want to share? Tell us your testing tips in the comments below!

Digital & Social Articles on Business 2 Community
