Uncover common issues when measuring digital ad performance using views, clicks, attribution and other metrics.

Online advertising seems like the perfect mix of creativity and data. Marketers can test various styles of ads and get quick results. Almost every aspect of digital advertising is measurable. It’s “data-driven marketing” — unless the numbers are mostly B.S., which seems increasingly likely.

I recently spoke with a professional colleague who worked in the ad space for years. He discovered discrepancies between reports from various sources, including Google, and contacted Google to resolve the problem. They told him that if the discrepancy wasn’t more than 20%, it wasn’t worth looking into. That’s a pretty big error bar in your advertising data, but it turns out to be small potatoes compared to what other people are finding.

Some very sad figures

Only 36% of ad spending on demand-side platforms actually reached the advertiser’s intended audience, according to the Association of National Advertisers (ANA). The ANA report on the programmatic supply chain found that a high percentage was spent on ads that were nonviewable or displayed to bots.

Bob Hoffman, author of “Adscam,” says the numbers are far worse than that. He estimates that only 3% of digital ad spend pays for ads actually seen by humans.
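To put those percentages in budget terms, here is a rough back-of-the-envelope sketch. It assumes a hypothetical nominal CPM of $10 (not a figure from either source) and treats each estimate as the share of spend that reaches a real person. The function name `effective_cpm` is made up for illustration.

```python
def effective_cpm(nominal_cpm, reach_share):
    """Cost per thousand impressions that actually reach people.

    nominal_cpm: the CPM on the invoice (hypothetical value below).
    reach_share: fraction of spend that reaches a real audience (0 < share <= 1).
    """
    if not 0 < reach_share <= 1:
        raise ValueError("reach_share must be between 0 (exclusive) and 1")
    return nominal_cpm / reach_share


NOMINAL_CPM = 10.0  # assumed for illustration only

ana_cpm = effective_cpm(NOMINAL_CPM, 0.36)      # using the ANA's 36% figure
hoffman_cpm = effective_cpm(NOMINAL_CPM, 0.03)  # using Hoffman's 3% estimate

print(f"ANA estimate:     ${ana_cpm:.2f} per thousand real impressions")
print(f"Hoffman estimate: ${hoffman_cpm:.2f} per thousand real impressions")
```

Under these assumptions, a $10 CPM is really closer to $28 if the ANA is right, and over $300 if Hoffman is.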

Note that numbers are being reported each step of the way in the advertising process. It’s all very “data-driven,” but the data might be junk. What’s going on?

We all know the famous quote attributed to John Wanamaker: “Half the money I spend on advertising is wasted; the trouble is, I don’t know which half.” In Wanamaker’s day, the ad exec could verify that his ad was actually on the billboard, in the magazine or announced on the radio. Since online advertising is customized to each user, you can’t check to make sure your ad is displayed.

Advertiser: “Hey, I’m not seeing my ad on your site.”

Ad salesman: “That’s because you’re not in the target audience.”

How is it possible for numbers to be as bad as the ANA suggests? Let’s back up and ask what might be causing these radical differences in numbers, focusing on some key metrics.

Views and impressions

Advertisers want their ads to be seen by the correct audience in the correct context. Each of those elements can go wrong for many different reasons. Here are some of the top culprits.

Non-viewable impressions. These occur when your prospect goes to the right page, and your ad is “displayed” on the page, but it’s in a part of the page the prospect never sees. While there are efforts to combat this, an ad can still be counted as “viewed” when it never appeared on the visitor’s screen.

The ad is on the wrong site. You want your ad to display on a major media site, but it’s actually displayed on affiliate sites that don’t attract your core market. This could be caused by human error in the ad setup or confusion on the back end, but it does happen. The ad is displayed, but it’s not in the right context and possibly not to the correct audience.

Fraud. One of the most significant issues in online advertising is ad fraud, which includes:

- Using bots or automated scripts to create fake impressions.

- “Ad stacking,” where multiple ads are placed on top of each other in a single spot so that only the topmost is visible.

- Pixel stuffing, where a large ad is stuffed into a 1×1 frame.

I know what you’re thinking. “My rep is an honest guy and I’ve known him for years.” OK, but even if your rep is a saint, he’s only one cog in a very complicated machine that no one really understands from end to end. This machine includes good actors and bad ones, including countries that get most of their foreign currency revenue from various kinds of online fraud.

Repeat impressions served to the same user can each be counted, which inflates the apparent reach of a campaign. Again, adtech can and should compensate for this, but there’s no guarantee.
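If you can get impression-level logs, a quick sanity check is to compare raw impressions with unique users. The sketch below assumes a hypothetical log of `(user_id, ad_id)` pairs. Real logs vary by platform, and user IDs themselves are often unreliable.

```python
from collections import Counter


def summarize_impressions(impressions):
    """Compare raw impression counts with unique (user, ad) pairs.

    impressions: iterable of (user_id, ad_id) tuples (hypothetical log format).
    """
    per_pair = Counter(impressions)
    raw = sum(per_pair.values())   # what a naive report would show
    unique = len(per_pair)         # distinct user/ad combinations
    avg_frequency = raw / unique if unique else 0.0
    return {"raw": raw, "unique": unique, "avg_frequency": avg_frequency}


# Made-up sample: six impressions served to only three people.
log = [
    ("u1", "ad_a"), ("u1", "ad_a"), ("u1", "ad_a"),
    ("u2", "ad_a"),
    ("u3", "ad_a"), ("u3", "ad_a"),
]

summary = summarize_impressions(log)
print(summary)
```

In this made-up sample, a report showing six impressions describes only three people, each seeing the ad twice on average.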

In addition to all this, there are problems related to geographic irrelevance, different criteria for counting impressions on different platforms and regular old human error or technical problems.

What does this all add up to? Hoffman tries to lay it all out in his book, but I think the honest answer is only God knows.

The problems with clicks

Simply viewing an ad can have some effect on the web visitor, and many advertisers promote the idea of “view-through conversions.” Still, clicks are online gold because they send your prospect to your chosen page. Or so you hope. There are still problems.

Click data can be corrupted by bot-enabled fraud, accidental clicks, redirect loops, traffic from proxy servers and plain old lying.

The good thing about clicks is that clicking on an ad sends the visitor to your page, where you can parse the traffic yourself. If you have bot detection technology, you can filter your traffic to better understand how many humans your ad attracted.

There’s still room for error. If the user leaves a page before the tracking code fires or if the tracking code malfunctions, there will be a disconnect between clicks and page views.
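Here is a minimal sketch of that kind of do-it-yourself check: filter landing-page visits with a naive user-agent test, then compare what’s left with the clicks the platform reported. The marker list, field names and sample data are all assumptions for illustration. Real bot detection relies on far more than user-agent strings.

```python
# Illustrative substrings only; real bot traffic often disguises itself.
BOT_MARKERS = ("bot", "crawler", "spider", "headless")


def looks_like_bot(user_agent):
    """Very rough heuristic: flag user agents containing a known marker."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def click_gap(reported_clicks, landings):
    """Return (human_landings, percent of reported clicks unaccounted for)."""
    human = [v for v in landings if not looks_like_bot(v["user_agent"])]
    gap = reported_clicks - len(human)
    gap_pct = 100.0 * gap / reported_clicks if reported_clicks else 0.0
    return len(human), gap_pct


# Made-up data: the platform bills 5 clicks, the server logged 4 landings.
landings = [
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"},
    {"user_agent": "Mozilla/5.0 (compatible; ExampleBot/1.0)"},
    {"user_agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0) Safari/604.1"},
    {"user_agent": "HeadlessChrome/119.0"},
]

humans, gap_pct = click_gap(5, landings)
print(f"{humans} likely-human landings; {gap_pct:.0f}% of billed clicks unaccounted for")
```

The gap lumps together bots, lost tracking and everything else, so it tells you how big the problem is, not what caused it.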

The challenges of attribution

The “rule of seven” is a popular concept in marketing. The idea is that someone needs to hear or see a marketing message at least seven times before they take action on that message.

If that’s true, which of those seven touchpoints should get credit for the sale? It’s a silly question, but it’s fundamental to online marketing.

This is partly why Tim Parkin calls marketing attribution “the fog of marketing” in his article “Measuring the invisible: The truth about marketing attribution.” Aside from these conceptual problems with attribution, technical issues can make attribution statistics misleading.

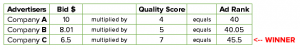

Different advertising platforms use different methods of tracking conversions, which leads to discrepancies. One system might track a conversion within a specified window after an ad view or click. For example, any conversion within 30 days of viewing or clicking on an ad can be credited to that ad. Other systems use last-click attribution or variations on that theme.
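A small sketch shows how the same sale can be counted differently. It assumes a hypothetical customer journey with three touchpoints and compares a last-click rule with a 30-day window rule in which every platform that touched the customer claims the conversion. The platform names, dates and function names are invented for illustration and don’t describe any specific vendor’s logic.

```python
from datetime import datetime, timedelta


def last_click(touches, conversion_time):
    """Credit the platform with the most recent click before the conversion."""
    clicks = [
        t for t in touches
        if t["type"] == "click" and t["time"] <= conversion_time
    ]
    if not clicks:
        return None
    return max(clicks, key=lambda t: t["time"])["platform"]


def window_claims(touches, conversion_time, window_days=30):
    """Every platform with a view or click inside the window claims the sale."""
    window = timedelta(days=window_days)
    return {
        t["platform"] for t in touches
        if timedelta(0) <= conversion_time - t["time"] <= window
    }


# One made-up customer, one sale, three touchpoints.
conversion = datetime(2024, 3, 31)
touches = [
    {"platform": "display", "type": "view", "time": datetime(2024, 3, 5)},
    {"platform": "social", "type": "click", "time": datetime(2024, 3, 20)},
    {"platform": "search", "type": "click", "time": datetime(2024, 3, 30)},
]

winner = last_click(touches, conversion)
claimants = window_claims(touches, conversion)
print("Last-click credit:", winner)
print("Platforms claiming the sale:", sorted(claimants))
```

Here last-click gives all the credit to search, while the window rule lets three platforms each report the same single sale. Add up their dashboards and you get three conversions from one customer.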

Optimizing ad spend means doing more of what works and less of what doesn’t. If the prospect has to see seven ads before taking action, how do you know which ad is “working”?

Do you believe in advertising?

If the data is mostly junk, which may very well be true, and if attribution is a bit of a myth, which also seems true, does that mean we should abandon advertising?

Certainly not. Wanamaker continued buying ads even when he didn’t know which ads worked. Further, we all know from personal experience that ads influence our purchasing decisions. Advertising works.

The question for the modern ad buyer should not be “Do you believe in advertising?” but “Do you believe in adtech?”

Adtech offers a lot of data, which goes nicely into spreadsheets and allows us to make predictions, charts, etc. But some of that data has a lot of smoke and fog, even downright deception.

Maybe all the metrics are misleading us more than they’re helping us, and perhaps it’s time for modern ad buyers to read some old books on advertising and get back to basics.

The post Online advertising: The funny, fuzzy math appeared first on MarTech.
