Since Google announced in October 2015 that they no longer recommend the AJAX crawling scheme they launched in 2009, SEO strategists and web developers throughout the country have been wondering whether Google’s search crawlers can effectively pull in AJAX content.

What Is AJAX?

AJAX (Asynchronous JavaScript and XML) is a way for browsers to pull in new content without refreshing the page or navigating to a new one. It relies on JavaScript to do so.

The content can be as simple as a paragraph of text or as complex as the HTML code for a completely new page.
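To make the idea concrete, here is a minimal TypeScript sketch of an AJAX request that fetches an HTML fragment and swaps it into one part of the page without a reload. The `loadInto` helper, the `Container` and `FetchLike` types, and the `/fragments/latest-news` URL are hypothetical; in a real page the container would be a DOM element and `fetchFn` would simply wrap the browser’s built-in `fetch`.

```typescript
// Minimal shape of the response we rely on (the browser's Response satisfies it).
interface TextResponse {
  ok: boolean;
  status: number;
  text(): Promise<string>;
}

// Hypothetical injectable fetch, so the sketch can also run outside a browser.
type FetchLike = (url: string) => Promise<TextResponse>;

// Anything with an innerHTML property, e.g. a DOM element.
interface Container {
  innerHTML: string;
}

// Fetch an HTML fragment asynchronously and replace the container's content.
// The rest of the page is untouched: no full refresh, no navigation.
async function loadInto(container: Container, url: string, fetchFn: FetchLike): Promise<void> {
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`Request for ${url} failed with HTTP ${response.status}`);
  }
  container.innerHTML = await response.text();
}

// In a browser this might be wired up as (hypothetical element id and URL):
//   loadInto(document.getElementById("news")!, "/fragments/latest-news", (u) => fetch(u));
```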

Typically, web developers use it to create Single Page Applications (SPAs) with popular frameworks such as React and AngularJS. With AJAX, developers can create a seamless experience that feels like a dedicated desktop application. Examples include “apps in the browser” such as Trello, Twitter, Gmail, and Evernote’s web version.

In general, a SPA’s HTML content is not loaded into your browser when you first land on the site. Instead, JavaScript uses AJAX to “talk” with the site’s web server and, based on the data it receives, creates and renders the HTML the page needs to become accessible and useful to the user.

This is called client-side rendering: the browser and its programs do all the work of creating the page.
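The two steps described above, asking the server for data and then building the HTML in the browser, might look like this in a minimal TypeScript sketch. The `Message` shape, the `/api/messages` endpoint and the function names are hypothetical. Note what the server sends on the first request in this setup: little more than an empty container such as `<div id="app"></div>`; the list only exists after the script runs.

```typescript
// Hypothetical shape of the JSON the web server returns.
interface Message {
  from: string;
  subject: string;
}

// Step 1: "talk" to the web server via AJAX and get raw data back (not HTML).
async function loadMessages(endpoint: string): Promise<Message[]> {
  const response = await fetch(endpoint);
  if (!response.ok) {
    throw new Error(`HTTP ${response.status}`);
  }
  return (await response.json()) as Message[];
}

// Escape text before placing it inside HTML markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Step 2: create the HTML in the browser from that data (client-side rendering).
function renderInbox(messages: Message[]): string {
  const items = messages
    .map((m) => `<li><strong>${escapeHtml(m.from)}</strong>: ${escapeHtml(m.subject)}</li>`)
    .join("");
  return `<ul class="inbox">${items}</ul>`;
}

// In the browser, the page the server sent contains only <div id="app"></div>;
// the visible content appears once this runs:
//   loadMessages("/api/messages").then((msgs) => {
//     document.getElementById("app")!.innerHTML = renderInbox(msgs);
//   });
```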

The Relationship Between AJAX & SEO

As long as AJAX is used for application-like websites that are meant to be used by people rather than indexed by Google, there is no problem.

But issues arise when SPA-based AJAX is used to deliver traditional content websites that “must be indexed by Google”.

Google has been able to crawl portions of content that use JavaScript for some time. In fact, it is well documented that Google’s search crawlers are able to crawl and recognize metadata, links, and content that’s created with JavaScript.

Unfortunately, websites built purely on SPA AJAX frameworks are still extremely difficult to get indexed.

Google’s Initial Solution

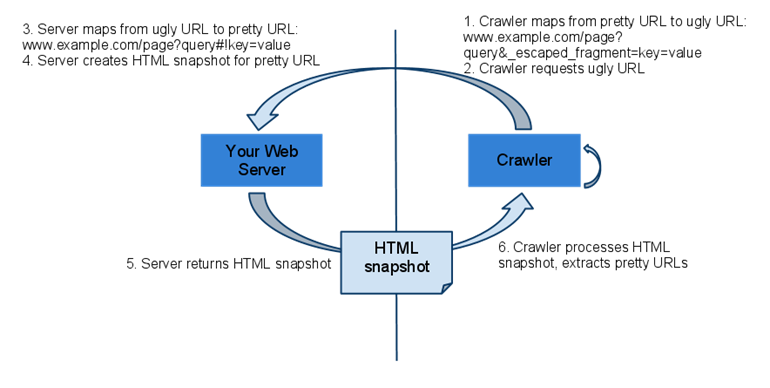

Back in 2009, Google developed a solution for SEO strategists struggling with AJAX: a method through which the search engine could obtain a pre-rendered version of a page instead of the empty framework page.

Essentially, instead of seeing the JavaScript that will eventually create an HTML page, Google is served a pure HTML version of that page: the end result, so to speak.
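Under the 2009 scheme, sites marked their AJAX states with “hashbang” (`#!`) URLs, and Google’s crawler requested an “ugly” URL in which the fragment was moved into an `_escaped_fragment_` query parameter; the server answered that request with the HTML snapshot. The TypeScript sketch below shows that URL mapping as we understand it from Google’s (now deprecated) specification. The function names are our own, and the set of escaped characters follows our reading of the spec rather than any official code.

```typescript
// Characters escaped inside the fragment value, per our reading of the spec:
// 0x00-0x20, '#', '%', '&', '+', and everything from 0x7F upward.
function needsEscaping(codePoint: number): boolean {
  return (
    codePoint <= 0x20 ||
    codePoint === 0x23 || // '#'
    codePoint === 0x25 || // '%'
    codePoint === 0x26 || // '&'
    codePoint === 0x2b || // '+'
    codePoint >= 0x7f
  );
}

function escapeFragment(fragment: string): string {
  let out = "";
  for (const ch of fragment) {
    // encodeURIComponent yields %XX sequences (UTF-8 bytes for non-ASCII characters).
    out += needsEscaping(ch.codePointAt(0)!) ? encodeURIComponent(ch) : ch;
  }
  return out;
}

// Map a "pretty" #! URL to the "ugly" URL the crawler would request.
// URLs without a #! fragment are returned unchanged.
function toEscapedFragmentUrl(prettyUrl: string): string {
  const hashIndex = prettyUrl.indexOf("#");
  if (hashIndex === -1 || prettyUrl[hashIndex + 1] !== "!") {
    return prettyUrl;
  }
  const base = prettyUrl.slice(0, hashIndex);
  const fragment = prettyUrl.slice(hashIndex + 2);
  const separator = base.includes("?") ? "&" : "?";
  return `${base}${separator}_escaped_fragment_=${escapeFragment(fragment)}`;
}
```

For example, `toEscapedFragmentUrl("https://www.example.com/ajax.html#!key=value")` returns `"https://www.example.com/ajax.html?_escaped_fragment_=key=value"`; an existing query string is kept and the new parameter is appended with `&`.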

Systems that help with server-side pre-rendering include prerender.io and brombone.

Since Google proposed the solution, there has been a spike in websites that allow Google to crawl their SPA AJAX content.

Google Deprecates Its Solution

Google announced on October 15, 2015 that they no longer recommend the AJAX crawling scheme they released in 2009.

The proposed solution is now deprecated. It is not known when, or if, Google will sunset it altogether.

For the moment Google still supports their previous solution; they simply no longer recommend that developers and designers use this specific approach for new sites.

This announcement has led to the question:

“Is Google Now Able To Completely Crawl AJAX?”

The key is the word “completely”, and the short answer is no.

Experience shows that websites that use a SPA framework, or otherwise rely heavily on AJAX to render their pages, often find only a handful of their pages, partially indexed, in Google’s cache. The larger the website, the more pages will be missing.

For sites like this, HTML snapshots (server-side pre-rendering) remain the most effective way to get their content into Google.

In general, the content delivered to the browser on the first page load is what Google renders, sees, and indexes; content pulled into the page dynamically at a later point is not seen.
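One rough, conservative way to act on this is to look at the HTML your server delivers before any JavaScript runs and check whether your key content is in it. The TypeScript sketch below strips scripts, styles and tags from a raw HTML string with regular expressions. The function names are hypothetical, regex-based stripping is a crude approximation rather than a real HTML parser (entities such as `&amp;` are not decoded, for instance), and the result says nothing definitive about what Google’s renderer will or will not pick up.

```typescript
// Approximate the visible text of a page as delivered, without executing scripts.
function textWithoutJavaScript(rawHtml: string): string {
  return rawHtml
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // drop script bodies
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ") // drop style bodies
    .replace(/<[^>]+>/g, " ") // drop remaining tags
    .replace(/\s+/g, " ") // collapse whitespace
    .trim();
}

// True if the phrase appears in the first-load HTML text (case-insensitive).
function inInitialHtml(rawHtml: string, phrase: string): boolean {
  return textWithoutJavaScript(rawHtml).toLowerCase().includes(phrase.toLowerCase());
}

// Hypothetical usage (Node 18+ or a browser); fetch returns the raw, unrendered HTML:
//   const raw = await (await fetch("https://www.example.com/products/42")).text();
//   console.log(inInitialHtml(raw, "Product 42 specifications"));
```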

Google’s New Advice On Using AJAX

In the same announcement Google states that they are “generally able to render and understand your web pages like modern browsers.”

But their choice of words, “generally able to”, has left many industry strategists concerned.

And in fact, Google continues to recommend that sites use progressive enhancement, in which the base experience of the site can be seen by any pure HTML browser, Google’s included:

“we recommend following the principles of progressive enhancement”

— Google Webmaster Central Blog, Oct. 2015

“Use feature detection & progressive enhancement techniques to make your content available to all users”

— John Mueller, Webmaster Trends Analyst @ Google, 2016

What You Can Do With This Information

Web developers and SEOs can use a number of tactics to ensure that their landing pages can be crawled, indexed, and reached by qualified organic users.

These tactics include:

1. Pre-rendering HTML Snapshots

Although Google no longer recommends using this particular tactic, they are still supporting it, which is important to know.

This particular system works well, although developing the code that pre-renders and serves up each snapshot can be tedious and difficult if you have no experience with it.

If you are not equipped to handle this task yourself, look for vendors that offer pre-rendering as a service.
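On the server side, supporting the scheme meant recognizing the crawler’s `_escaped_fragment_` request and answering it with the matching pre-rendered snapshot, while ordinary visitors keep getting the JavaScript application. A minimal TypeScript sketch of that routing decision follows. The `handleRequest` function, the in-memory `snapshots` map keyed by path plus `#!` state, and the 404 fallback are all hypothetical choices; producing the snapshots themselves is not shown.

```typescript
interface SimpleResponse {
  status: number;
  body: string;
}

// Decide what to send for a request URL.
// snapshots: hypothetical map from "path#!state" to pre-rendered HTML.
function handleRequest(requestUrl: string, snapshots: Map<string, string>, appShell: string): SimpleResponse {
  const url = new URL(requestUrl);
  // URLSearchParams percent-decodes the value, undoing the crawler-side escaping.
  const state = url.searchParams.get("_escaped_fragment_");
  if (state === null) {
    // Regular visitor: serve the JavaScript application shell.
    return { status: 200, body: appShell };
  }
  // Crawler request: look up the snapshot for the original #! state.
  const snapshot = snapshots.get(`${url.pathname}#!${state}`);
  return snapshot !== undefined
    ? { status: 200, body: snapshot }
    : { status: 404, body: "No snapshot for this state" };
}
```

With this keying, a request for `https://www.example.com/ajax.html?_escaped_fragment_=key=value` looks up the snapshot stored under `/ajax.html#!key=value`.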

2. Progressive Enhancement

Start with the basic HTML web page experience that anyone will be able to see and use.

Then, using a technique called feature detection, add enhanced capabilities to the page, layering them “on top” of the traditional, all-round HTML code.

This is the approach Google recommends.
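A minimal TypeScript sketch of feature detection in this spirit: the baseline is an ordinary link such as `<a href="/products/42">`, which any HTML browser or crawler can follow, and AJAX navigation is layered on only when the browser actually provides the features it needs. The `BrowserFeatures` type and the function names are hypothetical, and requiring `fetch` and `history.pushState` is just one plausible choice of features.

```typescript
// The subset of browser globals this enhancement depends on.
interface BrowserFeatures {
  fetch?: unknown;
  history?: { pushState?: unknown };
}

// Feature detection: test for the capabilities themselves, not for a browser name.
function supportsAjaxNavigation(env: BrowserFeatures): boolean {
  return typeof env.fetch === "function" && typeof env.history?.pushState === "function";
}

// Decide how a click on a baseline link should be handled.
// Without the features, the browser simply follows the href, so the page still works.
function navigationMode(env: BrowserFeatures): "ajax" | "full-page" {
  return supportsAjaxNavigation(env) ? "ajax" : "full-page";
}

// In a browser you would pass the real globals, e.g. navigationMode(window),
// and only attach AJAX click handlers to links when the result is "ajax".
```

The design choice is that the enhancement is purely additive: if detection fails, nothing is attached and the plain HTML links keep working for users and crawlers alike.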

3. Graceful Degradation

The inverse of progressive enhancement. Start with the higher-level, enhanced capabilities that humans with modern browsers will experience. Then work your way back to delivering a more basic but still usable version for feature-limited browsers such as search engine crawlers.

Hand-Picked Related Articles:

- What Is Unobtrusive JavaScript? (Part of Progressive Enhancement)

- How Many Users Have JavaScript Disabled

- How To (Temporarily) Disable JavaScript In Your Browser so you can see how Google might see the page

* Adapted images: Public Domain, pixabay.com via getstencil.com

No, Virginia, Google Doesn’t Index AJAX Sites

The post No, Virginia, Google Doesn’t Index AJAX Sites appeared first on Search Engine People Blog.
