It’s my impression that COVID-19 took an already-competitive business environment and brought it to a boiling point.

Many factors contributed to the upending of the world economy, including millions of lost jobs, slashed discretionary income and the massive leap millions took to make a living online.

At the same time, data analytics has increased in importance, and the fortunate few who are unlocking its power are making it through this epic shift. From my viewpoint here at Oxylabs, I have seen this happen across various industries and can offer some insights into surviving the post-COVID-19 business environment.

The Economic Impact of COVID-19

Let’s first assess the big picture. COVID-19 has prompted governments across the world to enact policies that have enforced lockdowns, closed borders and disrupted supply chains. The result is widely considered to be one of the most serious recessions of all time: global working hours dropped 14% in the second quarter of 2020 alone, the equivalent of nearly 400 million full-time jobs.

The implications of all this are clear: there is less money being spent, more of it is being spent online, and there has never been a more important time to harness the power of big data than right now.

Fortunately, the industry is still maturing, and there is room for growth for almost any business that wants to unlock the power of data to enhance its strategy.

Is Data Really the New Oil?

Data has been dubbed the new oil by some analysts, while others believe this is a simplistic analogy. My view is that the answer is somewhere in between: data can be valuable like oil, but it must be processed properly to be useful.

The extraction of data can be as simple as manually copying data from the web into a spreadsheet. An example that comes to mind is an individual comparing prices and features before purchasing a laptop.

Now imagine extracting that same type of information from hundreds of websites in seconds. That’s precisely the power provided by modern data extraction techniques like web scraping.

Web Scraping: A Weapon of Choice in the Post-COVID-19 World

Web scraping uses software (or “bots”) that crawls the web, working from predefined website URLs and keywords to search for information. It’s an intensive and complicated process that can tax server resources due to the massive number of simultaneous requests.
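To make the idea of searching predefined URLs for keywords concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions rather than a description of any particular scraping product: the URLs, page contents and keyword list are hypothetical, and the pages are hard-coded strings where a real crawler would download them.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the visible text of an HTML page, skipping script and style."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def find_keywords(html, keywords):
    """Return the keywords (case-insensitive) that appear in the page text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [kw for kw in keywords if kw.lower() in text]


# Hypothetical pages keyed by URL; a real crawler would download these.
PAGES = {
    "https://shop.example/laptops": "<html><body><h1>Laptop deals</h1><p>Price: $899</p></body></html>",
    "https://shop.example/about": "<html><body><p>About our company</p><script>var laptop = 1;</script></body></html>",
}

KEYWORDS = ["laptop", "price"]
matches = {url: find_keywords(html, KEYWORDS) for url, html in PAGES.items()}
```

Only the first page matches, because the word "laptop" on the second page sits inside a script tag rather than in text a visitor would see.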

Servers fight back, however. Almost everyone has experienced this, whether they realized it or not, through CAPTCHAs asking them to confirm their humanity by clicking the ‘I am not a robot’ box.

CAPTCHAs are just one of many measures servers take to prevent overzealous scraping activity that undermines website performance.

Proxies Make Web Scraping Possible

When a server sees the same machine (identified by its unique IP address) making multiple simultaneous requests, it often blocks that device.

Proxies circumvent these IP bans by routing each data request through a different proxy with its own unique IP. In that sense, proxies mimic “human” behaviour while masking the identity of the party extracting the data. Their function as intermediaries is what makes web scraping possible, and the uses of web scraping continue to increase as industries across all verticals uncover new ways to unlock the power of public data.
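As a rough illustration of spreading requests across proxies, the sketch below pairs each URL with the next proxy in a pool, using only Python's standard library. The proxy addresses and URLs are hypothetical placeholders, and simple round-robin rotation is an assumption made for the example; commercial proxy services may rotate IPs quite differently.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; real ones come from a proxy provider.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxy_pool):
    """Pair each URL with the next proxy in the pool, wrapping around."""
    rotation = itertools.cycle(proxy_pool)
    return [(url, next(rotation)) for url in urls]


def build_opener_for(proxy_url):
    """Create a urllib opener that routes HTTP and HTTPS traffic via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, proxy_url, timeout=10):
    """Download one page through the given proxy (performs a network call)."""
    opener = build_opener_for(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


# Plan five hypothetical requests; no network traffic happens here.
urls = [f"https://shop.example/item/{n}" for n in range(5)]
plan = assign_proxies(urls, PROXY_POOL)
```

With three proxies and five URLs, the fourth request wraps back around to the first proxy, so no single IP carries every request.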

Web Scraping Use Cases Continue to Grow

Awareness of the importance of high-quality data extraction is growing as more businesses worldwide adopt web scraping. The following is just a sample of the ever-expanding list of use cases:

- Obtaining product and pricing information for consumer goods websites, and flight and accommodation pricing for travel aggregator websites

- Catalog, stock and shipping information for e-commerce sites

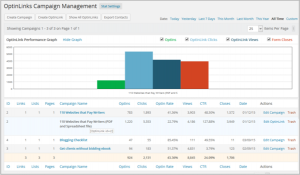

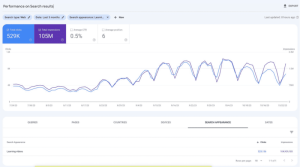

- Search Engine Optimization (SEO) intelligence

- Extracting details from profiles on public career-oriented websites by recruitment agencies

- Monitoring consumer sentiment and gauging success after the activation of marketing campaigns

- Brands tracking social media mentions (including the competition)

- Anti-counterfeiting and brand protection identification

Valuable Insights Can Make Competition Irrelevant

Just like with conventional mining, data extraction can provide treasure in the form of valuable consumer insights. Used in conjunction with strategic marketing campaigns, this valuable intelligence gives businesses an edge over their competition in several ways that include:

Winning the Price War

Web scraping is commonly used by marketers to obtain detailed intelligence for pricing strategies in the consumer goods, hotel and flight industries. Besides extracting data for pricing information, marketers can also use it to spot new trends.

Along with giving valuable insight into their own product and pricing strategies, scraping can also be used to monitor the competition and extract valuable intelligence for use in future campaigns and product development.

Market Sentiment Analysis

In the past, marketers relied on consumer surveys to gauge the success of their products or services. This data was often difficult to obtain and required incentives to encourage people to participate.

Today web scrapers can scour the internet with predefined keywords (like a brand name) to extract valuable information from comments and posts made on public websites. This type of information is especially valuable after the activation of a marketing campaign in order to gauge its effects and to formulate forward-thinking strategies in response.
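A toy version of this keyword-driven approach might look like the following sketch. The brand name, the posts and the two word lists are all invented for illustration, and counting positive and negative words is a deliberately naive stand-in for real sentiment analysis.

```python
import re

# Tiny illustrative word lists; real sentiment analysis uses far richer models.
POSITIVE = {"love", "great", "fast", "excellent"}
NEGATIVE = {"hate", "slow", "broken", "terrible"}


def mentions(posts, brand):
    """Keep only the posts that mention the brand as a whole word."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    return [post for post in posts if pattern.search(post)]


def sentiment_score(post):
    """Count positive words minus negative words in one post."""
    words = re.findall(r"[a-z']+", post.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


# Hypothetical public posts collected after a campaign launch.
posts = [
    "I love my new Acme laptop, great battery",
    "Acme support is slow and the charger arrived broken",
    "Thinking about buying a different brand",
]
brand_posts = mentions(posts, "Acme")
scores = [sentiment_score(p) for p in brand_posts]
```

Comparing scores collected before and after a campaign is one simple way to see whether sentiment moved.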

Monitoring the Competition

In times of war, armies would send out agents to spy on their enemies. Today scrapers can be deployed with no risk whatsoever to track the activities of the competition.

Valuable insights on products, prices and overall consumer sentiment can be obtained by extracting data with the right keywords. This type of data is invaluable for companies marketing current products or engaging in future product launches. It’s also useful for performing comparative analysis between a company’s products and prices and those of the competition.
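Once prices have been extracted, the comparative analysis itself can be very simple. The sketch below assumes two small, made-up price lists keyed by product name and reports the gap on every product both sides sell.

```python
# Hypothetical scraped prices, keyed by product name.
our_prices = {"laptop-a": 899.0, "laptop-b": 1249.0, "laptop-c": 649.0}
competitor_prices = {"laptop-a": 879.0, "laptop-b": 1299.0, "laptop-d": 999.0}


def price_gaps(ours, theirs):
    """Return our price minus the competitor's for products both sides sell."""
    shared = sorted(ours.keys() & theirs.keys())
    return {name: round(ours[name] - theirs[name], 2) for name in shared}


gaps = price_gaps(our_prices, competitor_prices)
# Products where the competitor is cheaper than we are.
undercut = [name for name, gap in gaps.items() if gap > 0]
```

A positive gap means the competitor is cheaper, which flags the products where a pricing response may be worth considering.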

Ethical Web Scraping

It is crucial to understand that web scraping can be used positively. There are transparent ways to get the job done so individuals and businesses can get the data they need to drive their businesses forward.

Here are some guidelines to follow to keep the playing field fair for those who gather data and the websites that provide it:

- Only scrape publicly-available web pages.

- Ensure that the data is requested at a fair rate that neither compromises the server nor is mistaken for a DDoS attack.

- Respect the data obtained and any privacy issues relevant to the source website.

- Study the target website’s legal documents to determine whether you can legally accept its terms of service and, if you do, whether your scraping would breach those terms.
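The “fair rate” guideline above is the easiest one to express in code. Here is a minimal sketch of a throttle that enforces a pause between consecutive requests. The class name and the 0.2-second interval are assumptions chosen for the example; an appropriate rate depends on the target website.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Sleep just long enough to respect min_interval, then return the pause."""
        now = self._clock()
        pause = 0.0
        if self._last is not None:
            pause = max(0.0, self.min_interval - (now - self._last))
            if pause:
                self._sleep(pause)
        self._last = self._clock()
        return pause


# Call wait() before each request; the first call never pauses.
throttle = Throttle(min_interval=0.2)
pauses = [throttle.wait() for _ in range(3)]
```

The clock and sleep functions are passed in as parameters so the pacing logic can be checked without real delays.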

Advanced Web Scraping Tools Provide Greater Success

As mentioned earlier, proxies make in-house data scraping possible by acting as intermediaries between the requesting party and the server. They come in two main types, datacenter and residential, and the choice depends on the business use case, with residential proxies being most suitable for especially challenging data targets and/or specific geographic locations.

Not every company has the resources to conduct data extraction in-house. In those cases, outsourcing to a trusted solution is ideal because it can free up resources to focus on data insights rather than being overloaded by the challenges of data acquisition.

Sourcing proxies in a transparent and ethical way is also very important. For instance, residential proxies are sometimes obtained through apps downloaded by users who are unaware their device is being used in a proxy network. Ethically procured proxies, where permission is obtained and the users are rewarded, are not only fairer but also provide a better web scraping experience due to increased stability and performance, both of which translate into greater web scraping success.

A Final Word: Surviving the Post-COVID-19 World

COVID-19 has drastically altered the economy and made our business environment more competitive than ever. Web scraping can be the weapon of choice that helps a business survive and move to the next level.

Besides monitoring prices, the intelligence gained from web scraping can be used to gauge consumer sentiment, track the competition and activate marketing campaigns that zero in on the needs of your target market.

The importance of data is always increasing, and web scraping is rapidly becoming an essential tool for staying competitive in the post-COVID-19 business environment. The right tools result in better data and can increase profitability and success in an increasingly competitive business landscape.

Business & Finance Articles on Business 2 Community
