By S.A. Applin

We’re breaking the web and it’s unlikely we can fix it. Websites work, but their underlying data management doesn’t seem to work all that well. This didn’t happen suddenly.

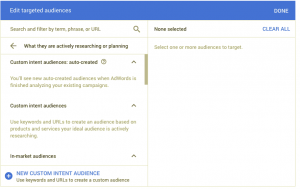

Over time, the web has built on top of itself, and with the relatively recent addition of more automation-controlling functions, it seems to have gotten a lot worse. While some believe that the web will eventually eat itself from perpetual “AI learning from AI,” the news for the rest of us in the present is that the web is already “eating” us in little erosive bites. Sure, it’s taking our data and doing things with it too, but I’m focused on the millions of daily little processes, interactions, and errors that are messing us up by breaking connections, returning wrong information, and otherwise rendering our data useless at any given moment.

These errors take our time, cause frustration, and, in aggregate, are breaking the way information moves in our society. When we rely on servers and algorithms for many tasks and they malfunction, our access to our data is gone. Then we are stuck managing without critical information until it is restored or repaired, or until we find a work-around. That translates into lost hours of productive time, lost sales, and wrong assumptions that make it nearly impossible to complete tasks. And AI is not going to resolve these problems either, no matter what the companies that think they have the most to gain from deploying it may tell us.

Silicon Valley technology was based on a philosophy of “green fields” and “blue skies,” the idea of unrestricted, expansive areas for innovation and change. When the (now) elder titans of the industry began building on the Internet and the web, those technologies were green fields: comparatively new, burgeoning research projects. Over decades, many people contributed to expanding them rather rapidly.

The web is now a middle-aged legacy system. While there seems to be infinite “room to grow,” the titans continue to want to develop green-field technologies like the Metaverse and AI, where they think they don’t have to consider the broken, complicated legacies of the past, or hire the social scientists and others who could help them keep these processes social so they won’t keep breaking for people who don’t tend to do things in mechanized ways.

But it isn’t that easy. Any “green field” technology that uses the web will have to integrate with it, and with us, in a social way that goes beyond being trained to “chat.” AI is being touted as the panacea for all digital ills by those who created the early web and have run out of the digital “green fields” they need to survive. But AI isn’t one technology; it’s many, and interoperability and integration are not what Silicon Valley likes to do. These titans can’t use AI to fix all of the broken processes and data interactions they’ve created. This is partly because AI is trained on systems and data that were already broken, and partly because the many AIs come from different vendors and are built on different models, and we don’t necessarily have interoperability between those, either.

Because of this, security becomes a complex challenge, which is why there are hacks and leaks. The rest of us just want to get through our tasks without being stopped by bad data processes or hacks. But until each company thinks carefully about which processes it is automating, how it is automating them, and what those processes communicate with (including us), I fear the outages are going to increase. It is also worth noting that any shiny new “green-field” technology will still need to connect to the broken web.

People can hold inflexible, rigid, and spotty systems together because we are uniquely able to adapt in dynamic ways. We can see solutions to problems, and we are willing to experiment to see whether we can creatively work them out. Computers can’t do this as easily outside of bounded domains, like a chess game or anything else with finite rules.

If we remove people from the front- and back-end interaction with websites and data, as many companies are doing, we will continue to see structural holes in nearly every interaction. For AI to work, it needs to cooperate with people, and with the legacy web and Internet—as well as with other AIs. But even that won’t fix all the broken mechanisms, technologies, processes, and automations that are running (and ruining) interactions.

In our daily use of the web and the back-end matrices of computers that have been cobbled together from the very start, we often hit interaction snags, or systems that can’t accommodate our needs. For most of us, the Internet usually connects us, but our data, and our access to it, are becoming increasingly unreliable, much like our weather under climate change (which also causes data outages).

We’re not prepared for our data to be unavailable, inaccessible, or wrong. As we continue to automate and move people out of service and oversight roles, the conflict between efficiency and the flexibility to solve problems in real time will only get worse, because people are the ones providing the flexibility within these fairly rigid systems.

Deliberate misinformation and propaganda campaigns aside, we’ve created a plethora of unintended misinformation. Most of the time, the issues that frustrate us are interactions where the people designing the system didn’t think ahead enough to make it flexible. As recently as a few years ago, when something wasn’t correct, we had known mechanisms to address the issue: there were phone agents to call, and there were work-arounds. But these days, those people are being replaced with AI-driven chat agents, which have their own limitations.

Often enough, when we call a company to sort out something it hadn’t considered, we have to go through a chat agent, which might eventually transfer us to a backed-up live agent. We may have to leave our first name, last name, and email address and trust an online form that promises a response in a few days. The companies are saving time with automation, but our time is being stretched out over days of waiting for responses so we can complete tasks. When we’re contacting a company about something critical, a timely response is crucial. Waiting for companies to sift through emails and get back to us wastes our time and puts at risk the connections, cooperation, and other forms of social cohesion that keep our work and activities functioning. This fragmented, asynchronous communication makes it hard for us to do things both on- and offline, because it isn’t just one system that is “data broken”; it is all of them, each in a different way.

These issues are constantly happening to all of us. Last week, I was trying to purchase earthquake insurance, and the company’s auto-populate feature had a problem with our names. It put half of each of our names into two separate fields, creating two new names that were combinations of both. The form was generated automatically and sent for us to sign. When I flagged the error to the agent, whom I was able to contact, she told me that safeguards in place prevented her from correcting the names. None of the people I subsequently spoke with at my insurance company, each reached after going through automated agents and getting routed, could correct the errors on the form either. It ended up taking a week, seven hours on the phone, and six separate people to sort out. This was a single data-field population error. Imagine that in aggregate.

The week before, I was working with an online company that had advertised a benefit I wanted. I wrote in through the company’s online form and was told by the (human) agent that the benefit didn’t exist and was not listed on the site. I had to track down the CEO on LinkedIn and email them to get an answer. It turned out that the benefit did exist, but it didn’t appear anywhere in the site’s text; instead, it was part of the chatbot’s responses, an unsearchable third-party source. These kinds of disconnects are happening more frequently as company knowledge is split between people and automated services, and as people are increasingly removed from the equation, that knowledge is lost.

These incidents are only a few of the many blocks, errors, and problems happening on the web every day. Many of us have had to give up handling important tasks online because they can’t get resolved. This could be because software algorithms aren’t programmed to handle our contextual needs. Or it could be that these companies are unaware that we have needs beyond what their designers projected onto the personas they use in place of doing enough qualitative research with real people. Combined with an overreliance on quantitative metrics that count performance, efficiency, and engagement, these gaps could falsely signal to companies that we are getting what we need when we aren’t.

And as people are replaced with algorithms, there are fewer and fewer offline options when we get frustrated and try to solve our problems elsewhere.

Recently, 23andMe announced that hackers had gained access to users’ personal data. It took 23andMe a week to require “all users to reset their passwords ‘out of caution.’” A week is a long time to go without being notified, and many users claimed never to have received the email notification. Around the same time as the 23andMe snafu, ServiceNow was found to have had a vulnerability since 2015, “a misconfiguration” that “puts records into tables that are easily readable.” Bad actors could potentially have raided ServiceNow’s customer data for years. Once data is compromised, we can’t even fully assess the damage, because we have no idea where it goes or how it is used by those who’ve taken it.

We may think that Internet outage “hacks,” like those that hit Las Vegas, are few and far enough between that they mostly aren’t our problem, since they cause sudden, rapid information outages at large institutions we may not personally patronize. But even if those institutions don’t process our data, they may process the data of our friends, relatives, or companies we use, and we are sometimes caught up in those data-profile leaks, losing privacy and functionality when the institutions are compromised or go offline.

Some hacks and breaches cause a different kind of outage and damage that we are not prepared for, or insured against. In the case of the Las Vegas hacks, part of the computer-controlled offline infrastructure also went down. Vending machines, gambling machines, cash registers, food ordering systems, and more stopped functioning, and workers scrambled to do their jobs without a back-up strategy in place. They found paper and pens, but they had to downshift to a much slower process of offline transactions, exact change in cash, and so on, all thrown together in the moment. There wasn’t a “disaster plan,” yet many of the systems that go down in a disaster were affected. This “chasm crossing” means that we aren’t always immune to what is happening “elsewhere”: it’s often connected to us, and it is increasingly generating physical repercussions that will impact neighborhoods, communities, and cities.

People need to connect to the current web, which is a cobbled-together system of servers of every age, stacked up like a house of cards, each breaking in its own way. Automation does help us manage a lot of very complex processes, but it is best used where it can count and measure things and regulate quantitative processes in a bounded, finite-rule way. People have social and sometimes complex needs that require context, which, unfortunately for the titans, automation and AI cannot yet address.

S. A. Applin is an anthropologist whose research explores the domains of human agency, algorithms, AI, and automation in the context of social systems and sociability. You can find more at @anthropunk, PoSR.org, anthropunk@bsky.social, and @anthropunk@federate.social.
