Knowing you’ve been hit by a negative SEO campaign is crucial to fighting it. Contributor Joe Sinkwitz outlines the tools and steps you can take to figure out if you’ve been targeted.

Have you ever experienced a rankings decline and suspected it was due to something a competitor was doing?

For this second article, we are going to focus on the process of diagnosing whether or not you’ve been hit by negative search engine optimization (SEO) techniques.

If you need a refresher or missed the first article, here it is: What Negative SEO is and is Not.

As you progress through the following steps to diagnose what happened, you’ll need to honestly ask yourself whether the decline you’re facing is more a result of your own actions or due to someone acting against you.

It’s an important distinction; your first inclination may be to assume someone is out to hurt you, when the cause might actually be something as simple as accidentally noindexing your index page, disallowing critical paths in robots.txt or having a broken WordPress plug-in that suddenly duplicates all your pages with strange query parameters and improper canonicalization.

In the first article, I segmented the majority of search signals into three buckets: links, content and user signals. In order to properly analyze these buckets, we’re going to need to be able to rely on a variety of tools.

What will you need?

- A browser with access to Google and Bing to find content.

- Access to your raw weblogs to review content and user signals.

- Google Analytics to review content and user signals.

- Google Search Console to review content, links and user signals.

- Bing Webmaster Tools to review content, links and user signals.

- A link analysis tool to look at internal and inbound link data.

- A crawling and technical tool to review content and user signals.

- A plagiarism tool to review content.

Let’s step through the different tools and scenarios to determine if you were hit by negative SEO or if it’s just a mistake.

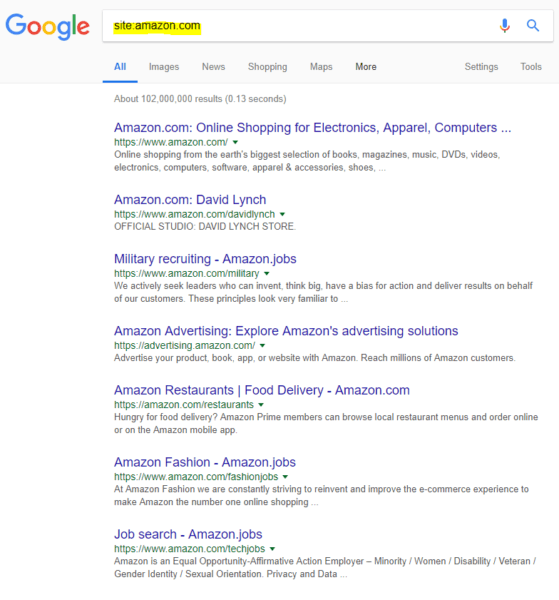

How are Google and Bing treating my site?

One of the simplest and easiest first steps to take is to check how Google and Bing are treating your site.

I like to use both engines in every audit because they react differently, which helps me quickly diagnose a problem. What are we looking for?

- site:domain.tld. Replace “domain.tld” with your actual domain. Both engines will return a list of pages from your domain, in a rough order of importance.

- Are pages missing that you would expect to see due to their value? Look at the source code and robots.txt handling of those pages to determine whether they are accidentally being blocked by a misconfiguration.

- Are pages being demoted? If the index page is suddenly not in the top spot, something is probably wrong. Running this simple check recently, we noticed our preferred URL handling was causing our index page’s canonical to update to a page that 301 redirects back in a loop. Google had demoted this page on the site: query, but Bing didn’t. The problem was solved just by running that simple check and looking into the problematic page.

- Are there pages you don’t recognize? Do those pages look like something as simple as a misconfigured setting in your content management system (CMS) that allows strange indexation, or are these pages off-theme and spammy? The former is likely a mistake; the latter is probably an attack.

- Perform some branded queries. Search for domain.tld, domain and other popular or normal phrases associated with your brand. Are you suddenly not ranking for them as you previously were? If you have dropped, were you overtaken by any suspicious results?
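When expected pages are missing from a site: query, one quick way to rule out a self-inflicted robots.txt block is to test your important paths against the file. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content and the list of paths are hypothetical examples, not taken from any real site. Note that this parser applies rules in file order, which can differ from how Google resolves conflicting Allow and Disallow rules, so treat the result as a first pass only.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot"):
    """Return the paths that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(user_agent, p)]


# Hypothetical robots.txt containing an accidental block of a whole section.
example_robots = """\
User-agent: *
Disallow: /products/
Disallow: /tmp/
"""

# Hypothetical list of pages you expect to see indexed.
important = ["/", "/products/widget", "/blog/post-1"]

print(blocked_paths(example_robots, important))  # ['/products/widget']
```

If a page you care about shows up in this list, the drop is more likely a configuration mistake than an attack.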

Raw weblogs

Having access to your raw weblogs is vital, but unfortunately, it is going to be made significantly more difficult with broader adoption of the General Data Protection Regulation (GDPR).

It is vital that you can access the internet protocol (IP) addresses recorded for each page visited on your site, including pages that don’t carry the Google Analytics tracking code. By parsing your logs, you can:

- Find IPs. Determine whether the same group of IPs has been probing your site for a configuration weakness.

- Identify scrapers. Know if scrapers have been attempting to pull down content en masse.

- Identify response issues. If your server is returning error responses where you wouldn’t expect them, the logs will show it.

Many issues can be solved if you have access and the inclination to parse your logs for patterns. It’s time-consuming but worth doing.
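As a rough illustration of the three log checks above, here is a small Python sketch. It assumes your server writes the common Apache/Nginx "combined" log format; the regular expression, the function name `summarize` and the sample lines are all illustrative assumptions, so adjust them to your own log layout.

```python
import re
from collections import Counter

# Assumed layout: ip ident user [time] "METHOD path protocol" status bytes ...
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+'
)


def summarize(lines):
    """Count hits per IP, 5xx errors per path and 404s per IP."""
    hits_by_ip = Counter()
    errors_by_path = Counter()
    not_found_by_ip = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        ip, path, status = match["ip"], match["path"], int(match["status"])
        hits_by_ip[ip] += 1
        if status >= 500:
            errors_by_path[path] += 1  # server response issues
        if status == 404:
            not_found_by_ip[ip] += 1  # many 404s from one IP can indicate probing
    return hits_by_ip, errors_by_path, not_found_by_ip


# Hypothetical sample lines using documentation-only IP ranges.
sample = [
    '203.0.113.7 - - [10/May/2018:10:00:01 +0000] "GET /wp-login.php HTTP/1.1" 404 162 "-" "curl/7.58"',
    '203.0.113.7 - - [10/May/2018:10:00:02 +0000] "GET /xmlrpc.php HTTP/1.1" 404 162 "-" "curl/7.58"',
    '198.51.100.4 - - [10/May/2018:10:00:03 +0000] "GET /products/ HTTP/1.1" 500 0 "-" "Mozilla/5.0"',
    '198.51.100.4 - - [10/May/2018:10:00:04 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]

hits, errors, probes = summarize(sample)
print(hits.most_common(5))    # heaviest requesters: possible scrapers
print(errors.most_common(5))  # paths returning 5xx responses
print(probes.most_common(5))  # IPs generating the most 404s
```

On a real site you would feed the function a file handle instead of a list and look at the top entries over the period when rankings dropped.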

Google Analytics

This could be its own series, as there are a multitude of areas to focus on within any sophisticated analytics package. However, let’s focus on some of the more obvious pieces:

- Bounce rate. Has it been trending up or down? How does this correspond with what you’re seeing in your raw logs? Is Google filtering out some of the bouncing traffic? Is the bounce rate showing any outliers when segmenting by channel (source), by browser or by geographic location?

- Session duration. Similar to bounce rate, for user signal purposes, are the sessions becoming abbreviated? Especially if also accompanied by an increase in overall sessions?

- All traffic channels and all traffic referrals. Are any sources now sending significantly more or less traffic when compared to periods in which your rankings were better? Are there unusual sources of traffic coming in that seem fake? Both are issues to research when you suspect negative SEO.

- Search console and landing pages. Similar to the Search Analytics check on Google Search Console itself, are there aberrations in which pages are now getting traffic, or are you seeing a large change in bounce and session duration on the pages you care about?

- Site speed. All things being equal, a faster site is a better site. Has the load time been increasing? Is it specifically increasing on Chrome? For specific pages? Are the slow pages ones that appear benign but that you didn’t previously recognize?

Google Search Console

What should you be looking for in Google Search Console (GSC) to help you determine if you’ve been a victim of negative SEO?

- Messages. If there is a massive change that Google wants to inform you about, such as a manual action due to incoming or external links, crawl problems or accessibility issues, messages in your GSC are the first place to look. If Google thinks you’ve been hacked, it will let you know.

- Search analytics. Looking at your queries over time, you can sometimes spot an issue. For instance, if query volumes associated with your branded and important phrases spike, did you see an uptick in clicks to your pages? If not, this could be an attempt to impact one user signal. Are your less important pages being sourced on the queries you care about? This could instead point to an issue with your content and content architecture.

- Links to your site. The obvious thing to look for is a large influx of low-quality, spammy links. But are they bad links? If I cherry-pick some of the worst links and see that they are blocking AhrefsBot, this tells me they are probably spammy links which need to go.

- Internal links. What pages are you linking to heavily that you didn’t realize you were linking to? It could be a navigational issue, or it could be a situation where you’re linking to a spammy doorway page injected into your CMS.

- Manual actions. It should also exist in messages, but if you have a manual action, you need to address it immediately. It doesn’t matter what the reasons are; even if there was a legitimate attack on your site, you must fix it right away.

- Crawl errors. Rule out a ranking drop due to malicious intent by checking on the stability of your setup. If your server is throwing too many 500 responses, Google will crawl it less. If this occurs, chances are users are going to encounter more issues with your web pages, and it will slip in the rankings as RankBrain folds in the user data. If you pair this with raw weblog data, you might see if the server instability is due to an attack.
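The search analytics check above (impressions spiking on important queries without a matching rise in clicks) can be approximated with a simple period-over-period comparison. The sketch below assumes you have exported per-query impressions and clicks for two periods; the `QueryStats` class, the `suspicious_queries` function and both thresholds are hypothetical choices for illustration, not values recommended by Google.

```python
from dataclasses import dataclass


@dataclass
class QueryStats:
    impressions: int
    clicks: int

    @property
    def ctr(self):
        """Click-through rate, or 0.0 when there were no impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0


def suspicious_queries(before, after, impression_growth=2.0, ctr_drop=0.5):
    """Flag queries whose impressions grew sharply while CTR fell sharply.

    The thresholds are arbitrary assumptions: impressions at least doubled
    and CTR at most half of its previous value.
    """
    flagged = []
    for query, now in after.items():
        prev = before.get(query)
        if prev is None or prev.impressions == 0:
            continue  # no baseline to compare against
        grew = now.impressions >= impression_growth * prev.impressions
        ctr_fell = now.ctr <= ctr_drop * prev.ctr
        if grew and ctr_fell:
            flagged.append(query)
    return sorted(flagged)


# Hypothetical exports for two comparable periods.
before = {
    "acme widgets": QueryStats(impressions=1000, clicks=300),
    "acme login": QueryStats(impressions=500, clicks=200),
}
after = {
    "acme widgets": QueryStats(impressions=5000, clicks=310),
    "acme login": QueryStats(impressions=520, clicks=210),
}

print(suspicious_queries(before, after))  # ['acme widgets']
```

A flagged query is only a prompt to look closer; seasonality or a news event can produce the same pattern.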

Bing Webmaster Tools

How can you determine what’s going on with your Bing rankings? Head to their webmaster tools and check for the following:

- Site activity. Similar to Search Analytics data from Google, Bing Webmaster Tools allows you to quickly assess whether your site is showing more or less frequently in search as a whole, whether click volume has changed, whether there’s been a change in crawling and crawl errors, and, of course, how many pages are indexed. You can give each section a deeper look.

- Inbound links. Just as with Google Search Console, you can see how these links look. Are they unexpected at all? Can they be found in different link analysis tools?

Link analysis tool

Using your favorite link analysis tool, look at these points to determine if you’ve been hit with negative SEO:

- Organic keywords. Do you see a general trend in rankings? This should roughly match the search analytics data from Google, though not always. Chances are you already know by this point that something is awry, but looking at the same data through a different visualization can help confirm whether there is a problem.

- New backlinks, new domains and referring IPs. If you’re experiencing an attack, this is where you will most likely find it, in the form of a large increase in links that you did not commission and do not want. Look at both the backlink and domain reports, because having 2,000 pages on the same domain link to you is viewed differently from 2,000 new domains linking to you. In some cases, you may also find a large number of domains linking from the same IP. It’s a lazy negative SEO tactic, but it’s one of the more common ones.

- Lost backlinks and lost domains. Another vector of negative SEO is getting a competitor’s links removed. Are you losing links you previously worked hard to secure? You might need to reach out to those webmasters to find out why. Are pages linking to you suddenly inaccessible and now linking to a competitor? Or not linking to you at all? You need to find out why.

- Broken backlinks. Sometimes a linking issue is your own. If you recently moved a site, made an architecture change or even updated a plug-in, you might have inadvertently caused a page to go offline, which results in lost link equity. Fixing this is as simple as bringing back the lost pages or redirecting the pages to a relevant page to capture a large percentage of the initial link equity.

- Anchors. Not enough time is focused here, even though over-optimization penalties and filters still exist. Did the change in links change your anchor text distribution, putting your commercial phrases in an unhealthy range? Are the overall phrases still looking OK, but the singular terms appear to be more targeted than previously? Are you getting a lot of incoming phrases that you would rather not be associated with?

- Outgoing linked domains and outgoing broken links. It is healthy to look to see if you are now perceived to be linking to areas where you did not wish to be linking and to check to ensure that those you wanted to be linking to still resolve as valid URLs. Injections to a CMS, indexed comments and other user-generated content (UGC)-type areas should be looked at.
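To make the backlink, referring IP and anchor checks above concrete, here is a small Python sketch that groups new links by domain and by IP and computes the anchor text distribution. The input layout (source URL, source IP, anchor text) is an assumption about what your link tool exports, and the function name `link_profile` and the sample rows are hypothetical.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse


def link_profile(backlinks):
    """Summarize (source_url, source_ip, anchor_text) tuples.

    Returns links per referring domain, the set of domains hosted on each IP,
    and each anchor's share of all links (case-insensitive).
    """
    links_per_domain = Counter()
    domains_by_ip = defaultdict(set)
    anchors = Counter()
    for source_url, source_ip, anchor in backlinks:
        domain = urlparse(source_url).hostname or ""
        links_per_domain[domain] += 1
        domains_by_ip[source_ip].add(domain)
        anchors[anchor.strip().lower()] += 1
    total = sum(anchors.values())
    anchor_share = {a: n / total for a, n in anchors.items()} if total else {}
    return links_per_domain, domains_by_ip, anchor_share


# Hypothetical export rows; domains and IPs are reserved example values.
new_links = [
    ("http://spam-a.example/p1", "192.0.2.10", "cheap pills"),
    ("http://spam-b.example/p1", "192.0.2.10", "cheap pills"),
    ("http://spam-c.example/p1", "192.0.2.10", "Cheap Pills"),
    ("https://news.example/story", "198.51.100.20", "Acme"),
]

per_domain, by_ip, share = link_profile(new_links)
for ip, domains in by_ip.items():
    print(ip, len(domains), "domains")  # many domains on one IP is a warning sign
print(share)  # anchors you would rather not be associated with stand out here
```

Many distinct domains resolving to one IP, combined with a single off-theme anchor dominating the distribution, matches the lazy attack pattern described above.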

Crawling and technical tools

Similar to the section on link analysis, if you have a favorite crawling and technical SEO tool, use that in your approach to determine if you’ve been hit by negative SEO.

- Site speed. How does the crawl site speed compare to what is found in Google Analytics or on individually run Google site speed tests? Are you being hampered by a large resource attempting to slow you down?

- Indexation status by depth. This is where manipulating your CMS setup and web architecture can really hurt if you’re suddenly duplicating or indexing a large percentage of pages, to the detriment of pages you do want to have indexed.

- Redirects. Namely, are you susceptible to open redirects which are leeching off of your available link equity?

- Crawl mapping. Conceptually this can be very useful when attempting to determine if there are active pages you really don’t want and how internal link distribution may be affecting them. Are they all orphaned pages (i.e., they exist but are not internally linked to), or are they embedded into the site’s navigation?

- On-page technical factors. This is the most important part, as it pertains to determining whether the situation is a negative SEO attack or an internal mistake. Crawling tools can help you quickly find which pages are set to nofollow or noindex or are conflicted due to canonicalization problems.
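For the on-page check, the core of what a crawling tool reports can be sketched in a few lines of Python with the standard `html.parser` module. This is a simplified illustration: the class and function names are hypothetical, it only reads the meta robots tag and the canonical link element, and it ignores X-Robots-Tag HTTP headers and relative canonical URLs, which a real crawler would also handle.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collect meta robots directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.robots += [d.strip().lower() for d in content.split(",") if d.strip()]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def audit_page(url, html):
    """Return a list of indexability issues found in the page source."""
    parser = IndexabilityParser()
    parser.feed(html)
    issues = []
    if "noindex" in parser.robots:
        issues.append("noindex")
    if "nofollow" in parser.robots:
        issues.append("nofollow")
    if parser.canonical and parser.canonical != url:
        issues.append(f"canonical points to {parser.canonical}")
    return issues


# Hypothetical page source with two problems.
page = """<html><head>
<meta name="robots" content="noindex, follow">
<link rel="canonical" href="https://example.com/other">
</head><body></body></html>"""

print(audit_page("https://example.com/page", page))
```

An empty list for a page that has dropped out of the index suggests looking elsewhere, for example at robots.txt or server responses.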

Plagiarism tool

How unique is your content? There are other plagiarism checkers, but Copyscape is the most popular, and it is thorough.

- Check your entire site. The simplest way to check is to have a plagiarism service crawl your site and then attempt to find significant string matches on other web pages found in the Google and Bing index. If you’re the target of fake Digital Millennium Copyright Act (DMCA) requests or parasitic scrapers that are attempting to both copy you and outrank you on more authoritative domains, this will help you find such issues.

- Internal duplication. While most might assume that a competitor is attempting to scrape and replace them, the greater issue is internally duplicated content across a blog, across categorical and tag setups, and improper URL handling.
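Internal duplication can be estimated without a commercial service by comparing overlapping word sequences ("shingles") between your own pages. The sketch below is one common approach, not how Copyscape works internally; the shingle size, the 0.8 threshold, the function names and the sample pages are all assumptions chosen for illustration.

```python
import re
from itertools import combinations


def shingles(text, size=5):
    """Return the set of overlapping word sequences of the given length."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets; 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, size=5):
    """Return (url_a, url_b, similarity) for page pairs at or above threshold."""
    sets = {url: shingles(text, size) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, score))
    return pairs


# Hypothetical crawl output: the same post exposed under a tag archive URL.
pages = {
    "/blog/post": "Negative SEO attacks are rare but internal duplication is common on most blogs",
    "/tag/seo/post": "Negative SEO attacks are rare but internal duplication is common on most blogs",
    "/about": "We are a small team that builds widgets for people who love widgets",
}

print(near_duplicates(pages))  # [('/blog/post', '/tag/seo/post', 1.0)]
```

Comparing every pair is quadratic in the number of pages, so for a large site you would sample templates (posts, categories, tags) rather than run it across everything.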

Wrap-up

Using a number of tools to determine if you’ve been hit by negative SEO is a good idea. They will help you find issues quickly and in detail. Knowing if you’ve been hit, and how, is crucial to help you respond and clean up the mess so you can move forward.

In the next installment of our Negative SEO series, we’ll tackle how to be proactive and prevent a negative SEO campaign.

[Article on Search Engine Land.]

Opinions expressed in this article are those of the guest author and not necessarily Marketing Land. Staff authors are listed here.
