Apple Encourages Audio Deepfakes To Ensure A User’s ‘Voice Is Never Lost’

Personal Voice, an Apple feature on the iPhone, lets users create a voice that sounds like their own for use in FaceTime, Phone, and assistive communication apps.

Ingham, the father featured in Apple’s marketing video for the feature, began using a wheelchair in 2013, and in recent years he has noticed changes in his voice.

According to Apple, the feature “means your voice is never lost.”

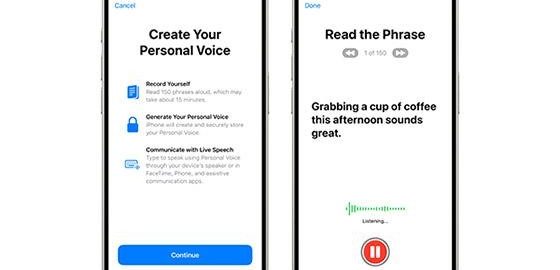

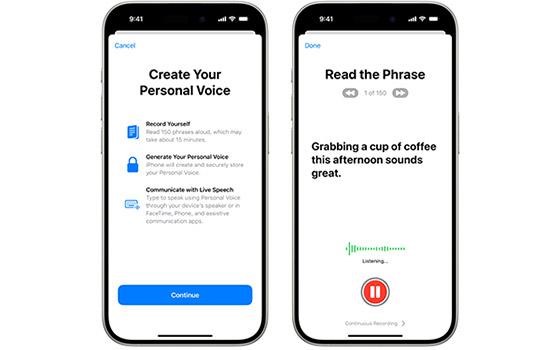

Wikipedia defines an audio deepfake, also known as voice cloning, as the use of artificial intelligence (AI) “to create convincing speech sentences that sound like specific people.” In that sense, Personal Voice is a deepfake technology. Users set up the feature in the Personal Voice section of the device’s Accessibility settings, and it is available in select languages.

Apple markets Personal Voice with a video titled The Lost Voice. Apple created two versions of it: a standard video and an audio-described version in which a narrator tells viewers what is happening on screen. The second version appears intended for people with impaired vision.

The narrator explains that a little girl stands in the thicket of a forest, wearing a large medallion over a colorful shirt. She opens it to reveal a compass. A large pink-and-white furry creature in a green, leafy shirt approaches. “Why, oh why, creature, are you so quiet? You’ve lost your voice,” the girl says. The creature nods, and she replies, “I’ll help you find it.”

The little girl talks throughout the video as the two search under trees and in bushes across the forest, looking for the creature’s voice.

Only at the end of the video does the viewer meet Ingham, who, from his wheelchair, reads the story to his daughter using Personal Voice.

The video’s music, “Yodeler,” is from X Carbon.

In the future, Ingham may lose his speaking voice completely. “I find that by the end of a long day, just bringing up my voice gets a bit harder,” he tells Apple. “I had to give a conference presentation just last month, and it turned out that, on the day, I wasn’t able to deliver it because of my breathing. So I had to get someone else to present for me, even though I had written it.”

We hear and read a lot about deepfakes used for nefarious purposes. In this case, Apple is using AI to help people with disabilities. Personal Voice is available in iOS 17, iPadOS 17, and macOS Sonoma.

Users create a voice that sounds like them by reading aloud a series of randomized text prompts, recording 15 minutes of audio. Apple trains the underlying neural networks entirely on-device, which advances speech accessibility while protecting users’ privacy.

Live Speech, another speech accessibility feature Apple released this fall, lets users type what they want to say and have it spoken aloud, either in their Personal Voice or in any built-in system voice.
