It takes a lot of traffic to get statistically significant results for A/B tests. High-traffic websites like Google and Facebook should A/B test everything, but low-traffic websites shouldn’t bother. If you’re a startup or a B2B company, you’re better off relying on qualitative feedback and intuition.

At least that’s how the argument goes. But I would like to offer a different perspective. I think low-traffic websites should A/B test, not only because doing so has enormous cultural benefits (such as democratizing decision-making), but also because A/B testing does work for low-traffic sites.

First, a story: In 2013, Optimizely was considering changing its pricing. As part of our research, we surveyed prospective and existing customers, conducted one-on-one interviews, and even ran focus groups. We recorded all of this, and I watched all of the footage. In one of the focus groups, the facilitator hands out printouts of our old pricing page to the participants. One guy looks at the printout and says, angrily, “I hate this. Every time I see ‘contact sales’ I think I’m going to get taken for a ride, and so I’ll never click this button.”

The focus group didn’t end up producing useful pricing information, but it did lead to a test idea: what would happen if we changed the “contact sales” button on our pricing page to read “schedule demo”? Under our sales process at the time, the sales team gave prospects a demo on the first call, so the new wording wasn’t misleading. Here were the results:

Clicks on ‘contact sales’ vs. ‘schedule demo’ results

As you can see, this simple change in wording produced an observed improvement of more than 300% in conversions (people who scheduled demos with our sales team). The page we ran this test on averaged 636 visits per day, and we got statistically significant results within a week.
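What “statistically significant” means depends on the test behind it. As a minimal sketch, here is a standard two-sided two-proportion z-test in Python; it is not necessarily the calculation Optimizely’s results page performed. The counts in the usage example are invented to mimic a roughly fourfold lift on about a week of this page’s traffic (636 visits per day, split evenly between two variations). They are not the real numbers from this test.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variations A and B convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, i.e. the best estimate if there is truly no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Probability of a difference at least this extreme in either direction under H0.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: about 2,226 visitors per arm, 10 vs. 40 demo requests.
    z, p = two_proportion_z_test(10, 2226, 40, 2226)
    print(f"z = {z:.2f}, p = {p:.6f}")
```

With an effect this large, a p-value well below the conventional 0.05 threshold arrives after only a few thousand visitors, which is why a low-traffic page can still reach significance in days.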

Nor is that test an outlier. Here are the results of a more recent test on our account creation modal dialog, which received about 133 visits per day while the test ran. In the variation, we removed the “enter password” and “confirm password” fields and instead auto-assigned passwords, which users were required to reset when they returned to Optimizely:

Accounts created successfully

Despite the low traffic, we got statistically significant results within a week, with an observed improvement of more than 18% on one of our most important top-of-the-funnel goals.

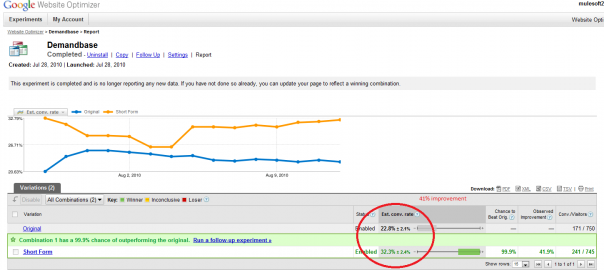

These results are not unique to Optimizely, either the company or the platform. Here is a test I ran at MuleSoft in 2010 using Google Website Optimizer, an A/B testing product that has since been replaced by Google Content Experiments. MuleSoft (an integration platform) did receive substantial traffic at the time, but the particular page this test ran on received only about 75 visits per day. In the test, I removed the state and country selection fields from the form and filled in those values with GeoIP data instead. We got statistically significant results in less than two weeks, with an observed improvement of more than 40%:

Results from an A/B test at MuleSoft (reprinted with permission from MuleSoft)

These tests moved the needle for core business metrics: demo requests, new accounts, new leads. Clearly, startups and B2B companies can achieve meaningful and statistically significant results on low-traffic pages. The key is to test things that will have a major impact. Explore before you refine. Try a major difference before an incremental improvement. Choose meaningful goals, and don’t complicate the test.

There is a story about Marissa Mayer testing 41 shades of blue for Google’s hyperlinks to find out which had the highest click-through rate (CTR). It is true that low-traffic websites cannot run tests like this, where traffic is split among many variations and the minimum detectable effect is tiny. But it is not true that low-traffic sites can’t get real wins through A/B testing. They can. As these examples show, A/B testing does work for low-traffic sites!
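The arithmetic behind “test big changes first” can be sketched with the textbook sample-size approximation for comparing two conversion rates (two-sided test, 5% significance, 80% power). This is a minimal sketch under stated assumptions: the 2% baseline conversion rate is made up for illustration, only the 636 visits per day comes from the pricing-page test above, and treating a many-variation test as 40 challengers against one control with a Bonferroni correction is one common, conservative modeling choice, not a description of how Google actually ran its test.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_arm(baseline, relative_mde, alpha=0.05, power=0.8, challengers=1):
    """Approximate visitors needed in EACH arm of a two-sided two-proportion test.

    baseline     -- control conversion rate, e.g. 0.02 for 2% (an assumption here)
    relative_mde -- smallest relative lift worth detecting, e.g. 0.5 for +50%
    challengers  -- variations compared against the control; alpha is split
                    evenly across those comparisons (Bonferroni correction)
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    if not (0 < p1 < 1 and 0 < p2 < 1 and relative_mde > 0):
        raise ValueError("rates must lie in (0, 1) and the lift must be positive")
    alpha_per_test = alpha / challengers
    z_alpha = NormalDist().inv_cdf(1 - alpha_per_test / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(daily_visits, baseline, relative_mde, challengers=1):
    """Days of traffic needed to fill the control arm plus every challenger arm."""
    arms = challengers + 1
    return visitors_per_arm(baseline, relative_mde, challengers=challengers) * arms / daily_visits


if __name__ == "__main__":
    daily = 636  # visits/day on the pricing page from the first test
    for lift in (3.0, 0.5, 0.05):
        print(f"+{lift:.0%} lift: {days_needed(daily, 0.02, lift):,.1f} days")
    # Hypothetical "41 shades": 40 challengers against one control.
    print(f"+5% lift, 40 challengers: {days_needed(daily, 0.02, 0.05, challengers=40):,.0f} days")
```

Under these assumptions, a fourfold lift needs only a few hundred visitors per arm, less than a day of that page’s traffic, while a 5% lift needs hundreds of thousands per arm, which is years of traffic. Splitting that traffic 41 ways and correcting for multiple comparisons makes it far worse.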

A special thanks to Cara Harshman for her contributions to this blog post!
